TVN Tech | AI Gains Traction In Captioning Market

While many broadcast television operations today are fully automated, closed captioning is one function that, for many stations, still relies heavily on people. To generate text that conveys program dialogue and other key audio to hearing-impaired viewers, hundreds of stations continue to use stenographer- or respeaker-based captioning services in which a human being hears the audio first. Accuracy is cited as the major reason for sticking with the manual workflows, particularly for fast-paced live programming with multiple speakers, like a newscast or sporting event.

However, AI- and cloud-based captioning systems from players like ENCO, Voice Interaction, IBM Watson Media, TVU Networks and Digital Nirvana are steadily improving their accuracy and beginning to apply competitive pressure to these legacy manual workflows. The big driver is cost, as an automated or cloud-based captioning system can cost half as much, or less, on a per-minute basis as operator-driven legacy systems.

With some broadcast playout and production functions starting to move to the cloud, the latency of AI-based captioning also may be less of a concern. Many cloud video workflows have an inherent latency themselves that is greater than the delay associated with cloud-based captioning, and the timing can be managed so the end result is synchronized for the viewer.

IBM Watson Media, which provides a cloud-based captioning service, studied the potential savings of automated captioning for one station group. The group had more than 32,000 closed-captioning hours per year, with 17 stations producing news and 168 hours per month per station. With an average cost for captioning services of $85 per hour, the group’s current closed captioning costs were $8.2 million over three years. Moving to Watson captioning at $0.70 per minute, the group stood to save $4.2 million over three years based on the cost of a 36-month contract.
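The arithmetic behind that comparison can be roughly reproduced. The sketch below is only an illustration, not IBM's actual model: it assumes a flat 32,000 captioning hours per year, and the function name `captioning_costs` is made up for this example. Because the inputs are rounded, it lands near the cited figures rather than exactly on them, at roughly $8.2 million for manual captioning, $4.0 million for automated captioning and $4.1 million in savings.

```python
def captioning_costs(hours_per_year, years, manual_rate_per_hour, automated_rate_per_minute):
    """Return (manual_cost, automated_cost, savings) in dollars over the contract term."""
    total_hours = hours_per_year * years
    manual_cost = total_hours * manual_rate_per_hour
    # The automated service is priced per minute, so convert hours to minutes.
    automated_cost = total_hours * 60 * automated_rate_per_minute
    return manual_cost, automated_cost, manual_cost - automated_cost


# Assumed inputs taken from the figures quoted in the article:
# 32,000 hours/year, 36-month term, $85/hour manual, $0.70/minute automated.
manual, automated, savings = captioning_costs(32_000, 3, 85.0, 0.70)
print(f"manual: ${manual:,.0f}  automated: ${automated:,.0f}  savings: ${savings:,.0f}")
```

At $0.70 per minute the automated rate works out to $42 per hour, a little under half of the $85 hourly average for captioning services.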

Captioning is a necessary cost of doing broadcast business. Stations are required by the FCC to provide captions for the bulk of their broadcast days, with commercials and late-night programming being two big exceptions. Those captions are supposed to accurately match the program audio, including non-verbal information like speaker identification and proper punctuation; be synchronized with the program audio as much as possible; be complete, running from the start to the finish of a program; and be placed so they do not obscure important visual content such as graphics.

Given the complexities involved, the FCC hasn’t put an exact number on captioning accuracy. But broadcasters are generally shooting for 99.9% accuracy on recorded programming, where captions are generated and placed as part of the post-production process, and over 98% for live programming like news, says Doug Karlovits, chief business development officer for leading vendor Vitac.

“Our customers take that seriously,” Karlovits says.

Vitac has about 500 stations as customers and more than 1,500 active customers overall. The company, which is based in Greenwood Village, Colo., with an operations center in Canonsburg, Pa., captioned more than 600,000 hours of programming last year, with about 550,000 hours of that being live. Vitac’s live captioning is performed by a distributed national workforce of over 500 employees located in all 50 states; COVID-19 didn’t have a major impact on day-to-day workflows since most of Vitac’s captioners were already working from home.

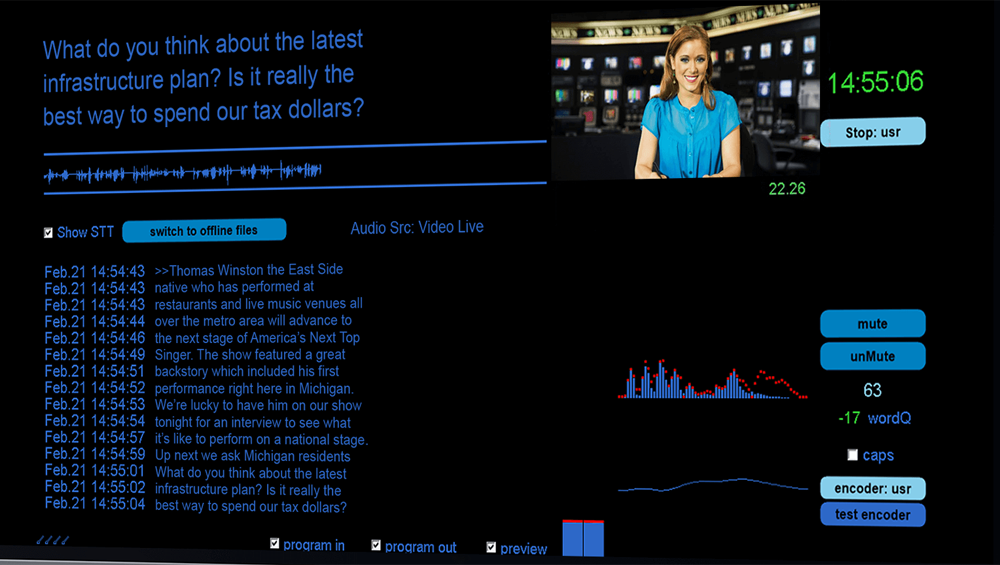

Automatic Speech Recognition

Vitac’s live captioners include both steno captioners and captioners who use “respeak” technology: they listen to an IP feed of the program audio and then repeat it into Automatic Speech Recognition (ASR) software that is trained to their particular voice. The ASR then converts their voice into text.

In either case, the live captions are then sent back to the station, where an encoder ingests the caption data and embeds it into the broadcast signal; Evertz, EEG and Lynx are leading encoder vendors. The process takes about three to five seconds.

Vitac has been using ASR since about 2012 and dramatically expanded its use of the technology after its 2017 acquisition of Caption Colorado, which had a number of respeaker captioners. Karlovits says that ASR keeps improving but isn’t enough by itself to guarantee accurate captions, while the respeaking workflow is highly accurate.

“Just to put out text [from ASR] doesn’t mean it’s always understandable to humans,” he says. “With unattended ASR, you’re taking the audio and using text and algorithms to try to figure out what the next word is going to be.”

A different point of view is offered by ENCO, which sells a fully automated ASR-based captioning system called enCaption. ENCO’s automated captioning system can be as little as “a tenth of the price” of traditional live captioning services, says President Ken Frommert. The enCaption system, which uses on-premises hardware that customers lease as part of a monthly service, varies in price from $10 to $30 an hour based on usage. Frommert says a medium-sized station producing 30 hours a week of news might spend a “couple of thousand” dollars per month for the service.

Frommert says a “couple hundred stations” already use the ENCO solution, which has an accuracy in the “high 90s” and a latency of 2 to 4 seconds. While the system uses machine learning to improve its word models, Frommert says that ENCO’s ASR is “speaker-independent” and doesn’t require training the system in the voice patterns of a particular person. That is particularly important when reporters are doing live interviews from the field.

“Machine learning has brought us up to a whole other level of quality with less latency in the last few years,” Frommert says.

One of the ways the ENCO system gets trained is through a MOS integration with all of the leading newsroom systems. That allows it to grab scripts from the station’s rundown and train the system in advance of the live newscast. Unlike the lower-performance Electronic Newsroom Technique (ENT) that is used by many stations outside of the top 25 markets, however, ENCO doesn’t simply generate captions directly from the news script. Instead, it listens to the live audio feed and generates captions on the fly.

“We don’t follow a script, but we do give more weighting to words that you were going to use in the script,” Frommert says. “That improves the spelling of proper nouns and what have you.”
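The idea of weighting script words without following the script can be illustrated with a small sketch. This is not ENCO’s implementation, which the article does not describe in detail. It assumes a recognizer that returns several candidate transcripts with scores (higher meaning more likely), and the names `tokenize`, `pick_hypothesis` and `boost` are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def pick_hypothesis(hypotheses, script_text, boost=0.5):
    """Choose among candidate transcripts, favoring words found in the script.

    hypotheses: list of (text, score) pairs, where a higher score is more likely.
    Each token that also appears in the script adds `boost` to the score.
    """
    script_vocab = set(tokenize(script_text))

    def biased_score(hypothesis):
        text, score = hypothesis
        hits = sum(1 for token in tokenize(text) if token in script_vocab)
        return score + boost * hits

    return max(hypotheses, key=biased_score)[0]


# Illustrative data: the recognizer alone slightly prefers the misspelled place name.
script = "Canonsburg mayor announces road repairs"
candidates = [
    ("the mayor of cannons burg spoke today", -4.0),
    ("the mayor of canonsburg spoke today", -4.3),
]
print(pick_hypothesis(candidates, script))
```

In this made-up case the audio is still what drives the captions, but the proper noun from the rundown tips the choice toward the correctly spelled candidate.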

Dynamic Segments Challenge Captions

Whether using an ASR-based system like ENCO or simply relying on ENT, captioning scripted stories within a newscast is easy compared to captioning dynamic segments like traffic reports or live interviews from the field. That’s why many stations that can afford it still pay for live captioning services to handle those three to four minutes of unscripted time, while some small-market stations using ENT simply let that time go uncaptioned.

IP transmission vendor TVU Networks, which transmits live reports from the field with its bonded-cellular “backpack” systems popular with many stations, is looking to provide a cost-effective solution. The company has added a feature called TVU Transcriber to its TVU MediaMind content management service that is designed to provide captioning for any content flowing through customers’ TVU Transceiver servers.

Since TVU MediaMind was already using cloud-based AI to index content transmitted through the system with speech-to-text and facial recognition algorithms, it was easy to leverage that technology to generate captions for those remote feeds, says TVU Networks CEO Paul Shen.

“What we said is, our system is intelligent enough to seamlessly insert the missing closed captioning,” Shen says. “We can fill in the four minutes of missing captions and do it at very high quality.”

IP transmissions through TVU generally have a natural latency of one to two seconds due to video compression, and TVU Transcriber’s cloud-based captions are delivered within that timeframe, meaning that they arrive in sync with the video and audio. Shen says the system has over 95% accuracy and works in conjunction with stations’ existing captioning systems to detect where captions are missing and instantly generate them and embed them in the feed.
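Detecting where captions are missing amounts to finding the uncovered stretches of a program timeline. The following is a minimal sketch of that idea under simple assumptions, not TVU’s actual method: existing captions are represented as (start, end) spans in seconds, and the function name `find_caption_gaps` and the `min_gap` threshold are hypothetical.

```python
def find_caption_gaps(caption_spans, program_start, program_end, min_gap=2.0):
    """Return (start, end) stretches of the program that have no captions.

    caption_spans: list of (start, end) times in seconds covered by existing captions.
    Gaps shorter than `min_gap` seconds are ignored as normal pauses.
    """
    gaps = []
    cursor = program_start  # end of the captioned region seen so far
    for start, end in sorted(caption_spans):
        if start - cursor >= min_gap:
            gaps.append((cursor, start))
        cursor = max(cursor, end)
    if program_end - cursor >= min_gap:
        gaps.append((cursor, program_end))
    return gaps


# Illustrative 15-minute block with a four-minute uncaptioned live segment.
existing = [(0, 300), (540, 900)]
print(find_caption_gaps(existing, 0, 900))
```

Each gap returned here would be a candidate stretch for an automated system to caption and embed in the feed.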

TVU Transcriber doesn’t require any new hardware, and customers are charged only for the time that the system actually provides captions. Shen says the effective cost works out to about 50 cents per minute.

“That’s why it’s so convenient for the customer,” Shen says. “They drop it in and it just works.”

A new entrant in the automated captioning market, Ericsson subsidiary Red Bee Media, comes from the traditional captioning world. The U.K.-based firm manages playout, captioning and other services for the BBC, along with other major international broadcasters, and captions some 200,000 hours a year. About half of that is live captioning performed by respeakers using ASR software.

Red Bee has been using ASR for about 15 years, and the results are very good, says Tom Wootton, head of access services product for Red Bee Media. But until recently, ASR alone hasn’t been good enough to provide true accessibility to hearing-impaired viewers.

“What we’re seeing now over the past two to three years is a big increase in compute power and machine learning capability,” Wootton says.

Those improvements have changed Red Bee’s thinking about ASR. The company has now developed its own fully automated product, ARC (Automatic Realtime Captioning), which it is aiming at new markets including U.S. broadcasters and budget-conscious broadcasters everywhere.

ARC is sold in a Software as a Service (SaaS) model, with customers paying based on usage. If a human-driven caption service might cost around $75 per hour on the low end, Wootton says, the automated product will likely cost half of that.

“Our pitch will be that we want to halve your accessibility bill,” Wootton says. “That’s how we approach it.”

Red Bee Media had already moved the processing of its captioning services to the AWS cloud before COVID-19 hit. That made shifting to remote workflows relatively straightforward when lockdowns hit the U.S., Europe and Australia, and all of its captioners are now working from home.

Having all of Red Bee’s captioning services in the cloud also makes for an easy link between the legacy services and its new automated product. Red Bee can use its experienced captioners to help train the ARC system to recognize important words, such as local street names or topical words like COVID-19. Big improvements in quality for automated captioning can be achieved by using captioners to “seed” the system with a vocabulary curated for particular shows, Wootton says. That helps the ASR software recognize the difference between “lettuce” and “let us” in a cooking show, for example.

“What we do is apply a light operational layer to the automation to raise the quality bar,” Wootton says. “We’re finding the automation and traditional services are actually synergistic and come together quite nicely.”
