AI Is A Key To Automated Closed Captioning

For nearly two years, Gray Television has been evaluating automated closed captioning technology at its lab within WOWT Omaha, Neb., and in that time it has seen significant progress in speed and accuracy as systems incorporate the latest in AI and machine learning.

“A year ago, 80% to 85% accuracy is what we were looking at, and what we’re seeing now is pushing 95% accuracy,” says Gray’s Mike Fass, VP of broadcast technology.
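The article does not say how these accuracy percentages are measured. One common convention in speech recognition is word accuracy, defined as one minus the word error rate (WER), where WER counts the substitutions, insertions and deletions needed to turn the system's output into a reference transcript. The sketch below assumes that convention; the function names and the sample sentences are illustrative and do not come from Gray or any vendor.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Word accuracy under the assumed convention: 1 - WER."""
    return 1.0 - word_error_rate(reference, hypothesis)


# Hypothetical example: one wrong word in a ten-word sentence.
spoken = "the storm will reach omaha by six tonight with rain"
captioned = "the storm will reach oklahoma by six tonight with rain"
print(f"{word_accuracy(spoken, captioned):.0%}")  # prints "90%"
```

Under this measure, 95% accuracy still means roughly one wrong, missing or extra word in every 20.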

Because machine learning is a cumulative process, “you can’t just test it a couple of hours and sign off on it. You need to run it for a while to get a gauge for it,” Fass says. The longer it’s used, he says, the more it learns.

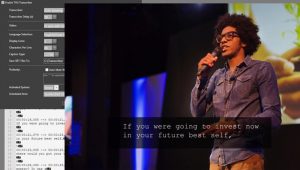

The TVU Transcriber in action. The top left window is where the user can turn the service on and off. The bottom left shows the AI-based speech recognition output, and the video window shows the resulting output with closed captioning from the TVU Transcriber service.

Systems getting tryouts at Gray include ENCO, EEG, Comprompter, TVU Networks, VoiceInteraction and The Weather Company. Digital Nirvana is also in the market.

Fass says it’s hard to say when he’ll declare a winner. “We want to see the accuracy at 100% and we’re seeing these systems get closer.”

Fass is not alone in keeping an eye on captioning technology. All broadcasters caption because all broadcasters must.

To accommodate the hearing impaired, the FCC requires stations to caption news and other live programming that they broadcast with minimal delays between the audio and captions. Also, the rules say, the captions must be included if the same programming is streamed on a website or app.

Some broadcasters like Gray would like to go beyond the regulatory mandate and caption all the programming they put on digital media.

Broadcasters see other benefits to automated captioning. It can be used to facilitate editing and production of scripts as well as to generate searchable metadata for archived video.

Automation can also help reduce latency. Human captioners typically take four to six seconds, and some words may be lost. Automated systems may get that down to as little as two seconds.

Broadcasters also expect to save money by replacing human captioners with machines.

The math on automation is compelling, according to Paul Shen, CEO of TVU Networks, one of the captioning vendors.

For example, a half-hour newscast will be mostly scripted, with about four minutes unscripted for traffic or weather. That means someone will need to be present to perform live captioning services for only a handful of minutes, he says.

“You don’t just pay for four minutes of time. Someone has to be sitting there, waiting for the newscast to start to begin to transcribe,” Shen said. “You have to pay for a full hour just for four minutes.”
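Shen's arithmetic can be made explicit. The short sketch below uses only the two figures he gives, a billed hour and four minutes of live captioning. The function name is illustrative, and no actual captioner rates are assumed.

```python
def billing_overhead(billed_minutes: float, live_minutes: float) -> tuple[float, float]:
    """Return (share of billed time spent captioning, cost multiplier per captioned minute)."""
    if billed_minutes <= 0 or live_minutes <= 0:
        raise ValueError("both durations must be positive")
    utilization = live_minutes / billed_minutes
    multiplier = billed_minutes / live_minutes
    return utilization, multiplier


# Figures from Shen's example: one billed hour, four unscripted minutes.
utilization, multiplier = billing_overhead(billed_minutes=60, live_minutes=4)
print(f"{utilization:.1%} of the billed time is live captioning")      # 6.7%
print(f"each captioned minute costs {multiplier:.0f}x the nominal rate")  # 15x
```

In other words, whatever the hourly rate is, the station in this example pays 15 times the nominal per-minute price for each minute actually captioned.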

The automated systems are clearly faster and less expensive, but they still have to overcome several problems, including latency, voice differentiation, people speaking over each other, noisy backgrounds and even punctuation.

“If the presenter is talking and pauses briefly, the systems will add a period and start a new sentence. It’s choppy and hard to read,” says Fass. “We’re waiting to see that get resolved.”

João Neto, CEO of VoiceInteraction, says autocaptioning of “a good speaker with a good microphone in studio” can reach accuracy levels of 95% to 100%.

But accuracy will drop for interviews in the street or during sporting events where there is background noise and speech is off the cuff, he says.

“People talk over each other, and when people talk over each other, it’s very difficult for artificial intelligence to understand who’s talking about what,” says Shen.

With the help of AI and other underlying technology, vendors are addressing the problems, but they will take time to fully resolve.

“I’ve heard people say they want to use voice recognition technology, but I don’t think we’re there,” says Hiren Hindocha, CEO of Digital Nirvana, whose automated closed captioning services are in use by two major U.S. cable and satellite networks and one Canadian broadcaster.

“Voice recognition still struggles with proper nouns and product names it hasn’t encountered yet,” he says. “For live news, I think that’s still a couple of years off.”

Hindocha says the first big breakthrough in voice recognition came from analyzing “tons of data” to determine statistically which word is most likely to come next. For example, he said, the word “how” is most often followed by “are you.”
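The statistical idea Hindocha describes can be illustrated with a toy bigram counter: tally which word follows which in a body of text, then predict the most frequent follower. This is a deliberately simplified stand-in, not any vendor's method. Commercial systems are trained on vastly more data and, as the vendors note, rely on neural networks. The four-sentence corpus and the function names are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for every word, how often each other word immediately follows it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text standing in for "tons of data".
corpus = [
    "how are you today",
    "how are you doing",
    "how is the weather",
    "how are things",
]
counts = train_bigrams(corpus)

print(most_likely_next(counts, "how"))    # are
print(most_likely_next(counts, "are"))    # you
print(most_likely_next(counts, "omaha"))  # None: never seen in training
```

The last line also shows why unfamiliar proper nouns are hard: a purely statistical model has nothing to go on for a word it has never encountered.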

Also helping has been general purpose voice recognition, such as that in use by devices like the Amazon Echo, he says. “It doesn’t really know what you’re going to ask. It’s not perfect, but it’s getting better and better at what it does.”

Ken Frommert, president of ENCO, agrees with Hindocha. “It’s all statistics. Everything about this is based on statistics,” Frommert says. “It’s the neural network approach of speech to text.”

Machine learning is at the heart of the various automated systems, but they may operate in different ways, and they have different user interfaces and features.

ENCO’s enCaption4 technology connects to the newsroom system to pull scripts, which helps with spelling names correctly, and it pre-processes audio to strip out all noise except human speech, Frommert says. The system can be used on-premises or in the cloud.

ENCO’s technology doesn’t require training with individual voices, Frommert says.

VoiceInteraction’s Audimus.Media can handle multiple languages. “We are looking to improvements in technology to get better results, better accuracy and to be faster,” Neto said.

TVU Networks’ captioning service is part of its AI-powered newsroom platform. The TVU Transcriber service is on-premises and works through the TVU Transceiver.

Digital Nirvana’s technology traces its roots to the financial world, for which the company provided transcripts of earnings calls. In 2016, the company leveraged speech-to-text technology to provide cloud-based automated closed captioning to the broadcast industry.

A new app, Metadator, makes it possible to automatically create metadata. Both technologies use cloud-based speech recognition technology.

Part of the allure of cloud-based technologies, Hindocha said, is that it’s easier for broadcasters to integrate the services into the workflow than it is with technologies that require dedicated hardware.

Comments (1)

tvn-member-9348876 says:

November 1, 2018 at 1:43 pm

I am glad to read Mike Fass’ statement that they are looking for 100% accuracy from these automated captioning systems. Being married to a sign language interpreter who is also an advocate for the deaf, I cannot tell you how important it is to get closed captioning correct and accurate. I have had to explain on numerous occasions why the captioning on a program was inaccurate or missing. We cannot accept 80% to 90% as anywhere near acceptable. Missing 1 or 2 words out of every 10 would be intolerable for the hearing community and is equally intolerable for the deaf.