AI Makes Big Progress In Broadcast Workflows

A big theme at last month’s NAB Show in Las Vegas was using artificial intelligence (AI) and machine learning (ML) to help speed the production process. While some of the focus on AI could be attributed to mainstream buzz over new generative AI tools like OpenAI’s ChatGPT, broadcast vendors have been working on AI-based products for several years to tackle the more labor-intensive parts of the broadcast workflow. At NAB, vendors ranging from Adobe to Vislink showed new AI features that can dramatically simplify everyday tasks in news and sports production.

Several NAB conference sessions were also dedicated to AI and ML, including a panel session at the Devoncroft Executive Summit, “Transforming Internal Operations — Driving Business Value Through Automation, ML and AI,” where executives from Warner Bros. Discovery, NBC News and Fox Sports discussed their companies’ own AI and ML initiatives.

David Sobel, VP of media management for Fox Sports, described how Fox began working with Google Cloud in 2019 to create a new asset management system to automate the process of logging, discovering and storing video assets. The goal was to create a web-based, deployable, scalable asset management system that was file-agnostic, Sobel said, to “tear down our workflows and say: ‘Here’s your one place for media — whether it’s live media or features, you go here.’”

Fox moved 20 petabytes of content to a cloud-based archive that holds some 1.6 million assets. In the process, it digitized 50,000 tapes dating back to 1994, from the network’s first years of NFL, college football and MLB coverage.

But centralizing Fox’s assets was only the first step. The second was to create powerful search functionality that wouldn’t just deliver standard results, like a touchdown from a particular NFL game, but quickly find what Sobel calls the “hard to find things.” Fox knew that ML was the answer, and it partnered with Google to train ML algorithms on thousands of hours of sports footage.

“Not just a catch or a tackle, that’s easy,” said Sobel. “But eyes, emotions, frustration — these sort of things that people spend hours searching for.”

The result was a unified search database that quickly gives a producer or editor the footage they need. It has already been successfully used over the past year for Fox’s NFL, FIFA World Cup and 2023 Super Bowl coverage. Sobel gave a theoretical example using the Super Bowl, where an analyst might mention that Kansas City Chiefs head coach Andy Reid had previously mentored quarterback Brett Favre when he served as offensive coordinator for the Green Bay Packers. Without knowing the exact year, a production assistant could go into the Fox asset management system, search for “Andy Reid” and “Brett Favre” and within seconds get 10 to 15 shots of Reid as Favre’s offensive coordinator.

“Now you’ve got a choice of shots,” Sobel said. “It’s been able to pull it, and you can make your content better and more intriguing, because you’re not just settling for the very first thing you find.”

AI For Editing

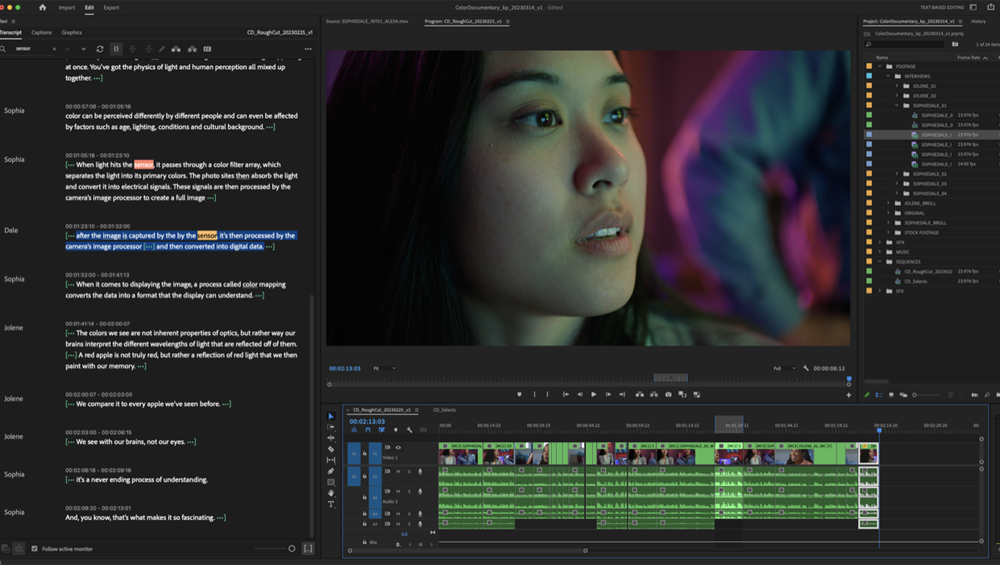

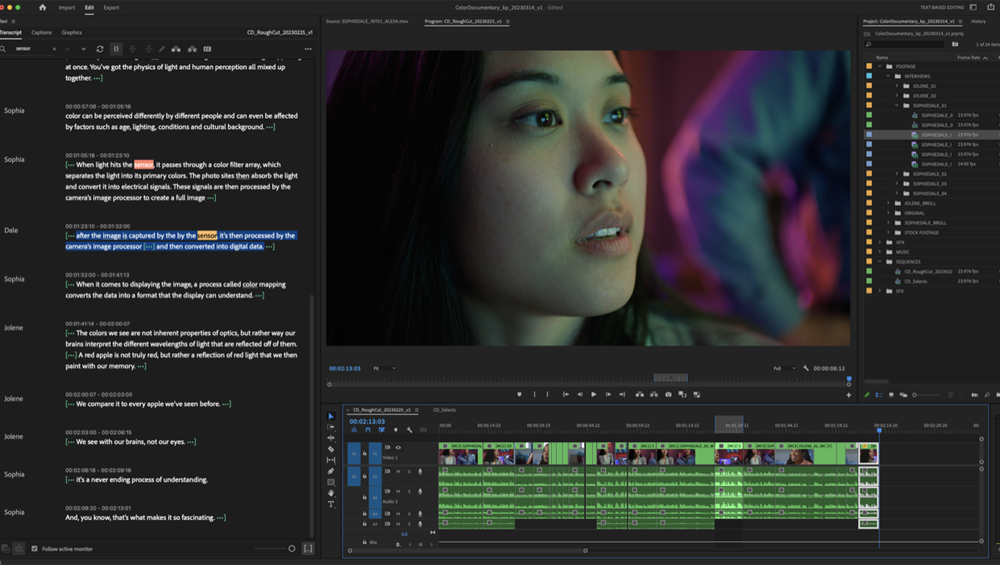

Adobe’s Sensei AI/ML engine has been around for a decade and powers many existing features in Adobe’s Premiere editing applications. At NAB, the company introduced text-based editing, in which the audio in news feeds is automatically transcribed upon ingest and displayed in a transcription window to the left of the editing interface. The AI even recognizes different speakers and notes them in the copy, allowing an editor to insert an ID. When the editor highlights a selection of text, the software dynamically makes a rough cut of the story.

“Once you find the lines you want, you can literally copy and paste the text, and it will build your rough cut for you,” said Paul Saccone, Adobe senior director of pro video marketing.

The transcription time is negligible, Saccone said, and takes only a few seconds for an average-length news clip. It can also export a transcript for use in other applications, such as a newsroom computer system.

The Adobe software, which runs on on-premise computers, actually produces two different transcripts: a source transcript with audio from the source footage; and then a sequence transcript, which is formed once an editor starts placing clips in a timeline. Once a timeline is built, the editor can go into the sequence transcript and if they decide they want to change the order of the lines, they simply copy and paste the text. The clips will then automatically shuffle in the timeline.
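
The transcript-driven reordering described above can be illustrated with a minimal Python sketch. It is not Adobe's implementation: the `Line` type, the `rough_cut` function and the sample timings are hypothetical, and the only assumption is that each transcript line carries the in and out times of the source audio it came from.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Line:
    """One transcript line with its position in the source clip (hypothetical type)."""
    text: str
    start: float  # seconds into the source footage
    end: float


def rough_cut(selected):
    """Lay the selected lines end to end on a timeline, in the order given."""
    timeline, cursor = [], 0.0
    for line in selected:
        timeline.append({
            "text": line.text,
            "source_in": line.start,
            "source_out": line.end,
            "timeline_in": cursor,
        })
        cursor += line.end - line.start
    return timeline


# Made-up source transcript for illustration only.
source = [
    Line("Good evening.", 0.0, 1.5),
    Line("The council voted tonight.", 1.5, 4.0),
    Line("Residents reacted.", 4.0, 6.0),
]

# "Pasting" the third line ahead of the second reorders the clips to match.
cut = rough_cut([source[2], source[1]])
```

In this sketch, changing the order of the text is the only editing action. The clip order and timeline positions are derived from it.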

“We’re calling it an entirely new way to edit,” Saccone said. “Because it literally makes creating a rough cut as fast as copying and pasting text, because that’s exactly what you’re doing. It’s a great tool for an assistant editor, and it’s a great tool for producers, who might have previously made a ‘paper cut’ by printing out a transcript and marking it up. That usually would have required running the audio through a transcription service and then printing it out. Now that work can all be done directly within the application.”

The AI also puts brackets in the transcript to indicate pauses in the audio, and an editor can set the threshold for how long a pause must be to get flagged. The editor can then go back and delete the brackets, which cuts the corresponding video and tightens up the package. Once the package is complete, the AI system automatically generates closed-captioning for the story based on the transcript.
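
As a rough illustration of the pause threshold, the Python sketch below flags gaps between timestamped words and computes which ranges of the clip survive once those gaps are deleted. The function names, the word timings and the one-second threshold are all assumptions made for the example. Adobe has not described its method at this level of detail.

```python
def find_pauses(words, threshold):
    """Return (start, end) gaps between consecutive words that last at least `threshold` seconds.

    `words` is a list of (text, start, end) tuples; this format is an assumption.
    """
    pauses = []
    for (_, _, prev_end), (_, next_start, _) in zip(words, words[1:]):
        if next_start - prev_end >= threshold:
            pauses.append((prev_end, next_start))
    return pauses


def keep_ranges(clip_start, clip_end, pauses):
    """Return the parts of the clip that remain after the flagged pauses are cut."""
    ranges, cursor = [], clip_start
    for pause_start, pause_end in pauses:
        if pause_start > cursor:
            ranges.append((cursor, pause_start))
        cursor = pause_end
    if cursor < clip_end:
        ranges.append((cursor, clip_end))
    return ranges


# Made-up word timings: a two-second silence sits between "won" and "tonight".
words = [("We", 0.0, 0.25), ("won", 0.25, 0.75), ("tonight", 2.75, 3.5)]
pauses = find_pauses(words, threshold=1.0)
kept = keep_ranges(0.0, 3.5, pauses)
```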

“It’s really an end-to-end workflow, all the way from ingest to output,” Saccone said.

Other AI features in Premiere include automatic tone-mapping, for smoothly mixing HDR and SDR content in an edit; and automatic reframing for social media, to put the video into a square or vertical format. Once an editor selects the required shape, the software automatically identifies the subject and frames the video accordingly.

At NAB the company also announced Adobe Firefly, a new set of cloud-based generative AI models. So far Adobe has trained its Firefly models on still images, specifically Adobe Stock, publicly licensed images or open source material, to provide a generative AI model for Photoshop and Illustrator that produces content that is safe for distribution without infringing on any copyrights.

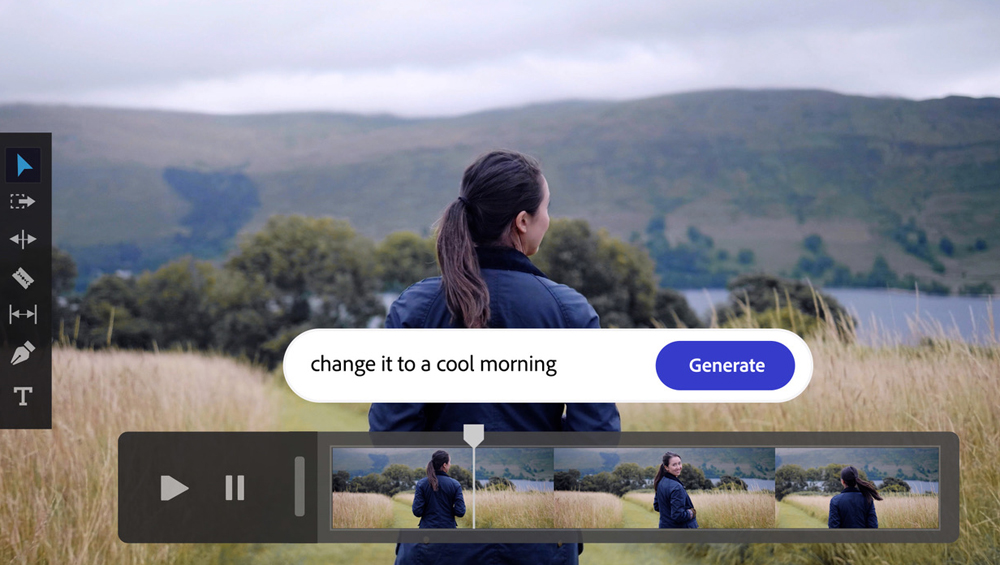

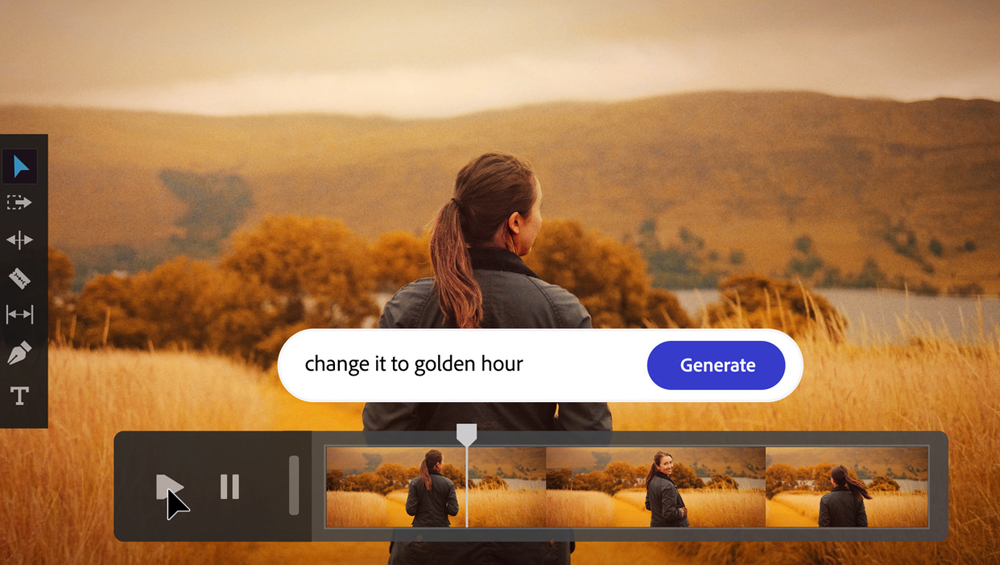

Adobe is also working on generative AI in video, though there are no product announcements yet, Saccone said, just a “vision.” He thinks generative AI may be helpful in doing color correction, such as taking a piece of footage and making it look like “Golden Hour” or “Cold Morning.” Adobe is also considering how metadata coming from cameras can be married to a script to help create storyboards or rough cuts of a package.

Examples of Adobe’s AI-based color correction concept.

“We’re trying to take some of the really common things that people do, and make them easier and assist people,” Saccone said. “The goal is not to replace an editor or a colorist as much as it is to help people realize their creative intent faster.”

The buzz over ChatGPT means that Adobe has recently been getting a lot of questions about AI from customers. But Saccone said the company already had Firefly under development last year. “We knew generative AI was going to be a thing, so we’re a little ahead of the curve on that.”

More AI-based production tools were being shown by IP transmission vendor TVU in its West Hall booth. TVU Search, an AI-based search engine that uses algorithms from Google, sifted through incoming live feeds in TVU Grid, the company’s cloud-based content switching, routing and distribution system. TVU’s “MediaMind” AI tool automatically performed speech-to-text captioning of the audio on incoming feeds and generated a real-time transcript, which allowed for an easy keyword search of people, places and things.

“MediaMind” also performs real-time facial recognition on incoming video, and this can also be set as a parameter in Search to quickly find video of public figures. In the demo, clicking on the face of President Biden in an incoming feed prompted the search engine to find all other video of the president available in Grid.

When reviewing search results, a TVU Search user could also perform a quick edit by selecting a clip in the timeline and allowing a video preview window and adjacent “speech recognition” window with the real-time transcript to load. By simply highlighting text in the speech-recognition window and clicking a “Clip” button on the user interface, the Search system automatically grabbed the corresponding chunk of video to create a clip.

AI In The Playing Field

EVS has its own team of dedicated replay specialists, and at previous NAB Shows has demonstrated using AI to direct coverage of a soccer match. At NAB 2023, it was showing the latest features for XtraMotion, an AI-based service that can create super-slow-motion replays from any live footage, as well as standard-frame-rate post and archived content.

The XtraMotion service uses interpolation algorithms to generate high frame rates (up to 3x real time) from conventional frame-rate cameras. The replay can then be played back in a matter of seconds from an EVS replay server. Since debuting in 2021, it has already found its way into several high-profile sporting events, including Fox’s coverage of this year’s Super Bowl.
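
To show what generating extra frames means in the simplest terms, the Python sketch below inserts blended frames between each pair of original frames. It uses plain linear blending as a naive stand-in and is not EVS's algorithm, which the company describes as AI-based. The `interpolate` function and the two-pixel "frames" are hypothetical.

```python
def interpolate(frames, factor):
    """Insert `factor - 1` linearly blended frames between each pair of neighbours.

    Each frame is a flat list of pixel values. Linear blending is a naive
    stand-in here, not the method used by any commercial product.
    """
    out = []
    for a, b in zip(frames, frames[1:]):
        for step in range(factor):
            t = step / factor  # 0 at frame a, approaching 1 toward frame b
            out.append([(1 - t) * pa + t * pb for pa, pb in zip(a, b)])
    out.append(list(frames[-1]))
    return out


# Two made-up frames of two pixels each, tripled in frame rate.
frames = [[0.0, 0.0], [3.0, 6.0]]
slow = interpolate(frames, 3)
```

Played back at the original frame rate, the tripled sequence runs at one-third speed. Simple blending like this produces ghosting on fast motion, which is the kind of artifact that learned interpolation aims to avoid.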

XtraMotion significantly expands the number of camera positions that can deliver super-slow-motion replays in sports production, said EVS Chief Marketing Officer Nicolas Bourdon. For example, specialty cameras with low-bandwidth connections, such as the compact “pylon cams” regularly used in college and NFL football coverage, haven’t been able to deliver high-frame-rate video. XtraMotion solves that problem.

“A director would never generate replays from those cameras,” Bourdon said. “This gives them more possibilities. Now to show a replay they don’t need anything but AI.”

The system can run on the public cloud or on-prem compute. Bourdon said most XtraMotion customers still want to use on-prem compute for live productions.

XtraMotion allows replay operators to simply clip any content from anywhere on an EVS XT network, render it to super-slow-motion and play it back seconds later from their replay server.

Brady Jones, a freelance replay operator who regularly works on NFL productions, demonstrated in the EVS booth how the creation of an XtraMotion replay can be started at the beginning of a play and shown immediately after. In his demonstration, he took recorded footage of a gymnast running to perform a vault. Immediately after she landed, Jones was able to play a super-slow-motion replay of the exercise.

“If it exists on XT, it’s there [for a replay],” Jones said.

Wireless transmission specialist Vislink demonstrated AI-led live production with its IQ Sports Producer (IQ-SP) system, with a PTZ (pan-tilt-zoom) camera automatically zooming in and out to follow cyclists racing around a velodrome (a banked indoor track). The system can be used for a variety of sports including soccer, ice hockey and basketball. It supports the insertion of graphics and overlays, such as a scoreboard. The AI can also automatically detect highlights and clip them to create a summary, or highlights reel, at the conclusion of an event.

IQ-SP, which became part of Vislink’s product portfolio with its 2021 acquisition of Mobile Viewpoint, was originally launched with a panoramic camera. It used AI to track the action and perform digital zoom, by finding a player, cyclist or the ball and then making a cutout of the panoramic image and zooming in.
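
The cutout step can be sketched in a few lines of Python. This is only an illustration of cropping a fixed-size window around a tracked position and keeping it inside the panorama. The `crop_window` function and the image dimensions are assumptions, not details of Vislink's system.

```python
def crop_window(center_x, center_y, out_w, out_h, pano_w, pano_h):
    """Return (left, top, right, bottom) of an out_w x out_h cutout centred on the target.

    The window is clamped so that it never extends past the panorama's edges.
    """
    left = min(max(center_x - out_w // 2, 0), pano_w - out_w)
    top = min(max(center_y - out_h // 2, 0), pano_h - out_h)
    return left, top, left + out_w, top + out_h


# A hypothetical 7680x2160 panorama with the tracked rider near the left edge.
window = crop_window(100, 500, 1280, 720, 7680, 2160)
```

Because the cutout uses only a fraction of the panorama's pixels, any further zoom has to be done digitally rather than optically.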

While customers were impressed with the speed and accuracy of the AI, said Vislink Chief Product Officer Michel Bais, most didn’t think the picture quality was good enough to take to air in a linear broadcast. That relegated IQ-SP mostly to streaming and mobile applications, except for a few niche sports like dog racing where the quality of the panoramic system was adequate.

“The problem with that solution is it’s not sharp,” Bais said. “It’s only sharp at one location.”

Vislink still thought there was great potential in using AI in higher-end sports production, such as second-tier professional soccer leagues, to replace manned camera positions and reduce costs. The concept wasn’t to do a full AI production but instead to take a seven-camera shoot and bring only two cameramen, with the remaining five cameras being controlled by AI. Over the past two years Vislink had seen an increased use of PTZ cameras that were being remotely controlled by operators using a joystick, and Bais thought AI could provide a more elegant and cost-effective solution.

So the company decided to invest in a system that could control PTZ cameras with broadcast-quality optical zoom. A simple overview camera now delivers video to the AI engine, which then steers the PTZ camera. The AI engine can run in the cloud, provided there is good connectivity from the venue, or in an on-premise server.

Early feedback on the improved IQ-SP system has been positive, Bais said. An Olympic-level cycling coach is already using the system on a daily basis to remotely monitor his cyclists’ training sessions, and the system will soon be used for horse racing coverage.

Vislink’s IQ-SP AI-driven production system with a PTZ camera.

Overall, Bais sees great potential for AI in sports, where most of the camera work is done from the same few positions, game after game. He thinks AI will be particularly useful in automatically creating highlights and game summaries, such as those IQ-SP provides, as they have become more important than the full-length games themselves.

“People want more and more sports on TV, but the amount of hours is still 24,” Bais said. “So you have to squeeze.”

AI-Based Ads

A new player in the television space whose core product is based on AI is Waymark. The Detroit-based company has developed a system that uses generative AI (including OpenAI’s GPT-3) to search the Web for information and images on a business and then automatically generate a 15- or 30-second television spot, including voiceover, based on what it finds.

Waymark’s AI is composed of a “mix of different models” which it uses to scour the Web for freely available information on a company, said CEO Alex Persky-Stern, using the AWS public cloud. The AI has been trained on hundreds of different visual styles, text scripts and images to allow it to produce spots made up of still images, video, music, text graphics and a voiceover performed by a synthetic voice.

A demonstration at Waymark’s NAB booth showed how the software could quickly produce a spot on a local bagel shop. The first step was to simply type in the name of the business. The software then scoured the web for any usable assets that were already online, such as on the company’s website or social media profiles, including logos and still images of menu items. It then produced a 30-second spot with a corresponding script, and text graphics and voiceover accurately matched the images on screen.

Waymark was originally marketing its product as a sales tool that local stations could use to show prospective clients what a television spot would look like. But the AI has proven good enough to deliver a finished product in less than five minutes that they can take to air.

“In practice we see people doing both,” Persky-Stern said.

For example, since February Waymark has been providing AI-generated spots for Spectrum Reach, the advertising sales business of cable operator Charter Communications. Persky-Stern said that 40% of the Waymark-generated spots that Spectrum Reach pitches to prospective clients actually make it to air.

Waymark is selling its technology on a station-wide or enterprise-wide basis with different pricing arrangements. A base package for an individual station might include 15 fully rendered go-to-air spots a month for a flat rate, Persky-Stern said, with an unlimited ability to generate demonstration spots for pitching purposes.

Earlier this month it signed a deal with station group Gray Television, which is rolling out the Waymark generative-AI technology across all of its stations. Gray President and co-CEO Pat LaPlatney said that local sales teams are already using the AI technology to approach small business prospects with customized spots.
