TVN Tech | Artificial Intelligence Offers Real Benefits

With more broadcasters adopting artificial intelligence (AI) technologies to automate mundane tasks, vendors are developing novel ways to improve the production process and the viewer experience.

During the Super Bowl, one vendor carried out a proof of concept project using AI technology to deliver smooth slow motion without using a super slow motion camera. That vendor is also using AI to operate offside cameras for soccer games.

AI makes it possible to streamline the highlights process via autoclipping, and it can be used to automate the enhancement of metadata on existing content in an organization’s media library, one vendor says. It can also help broadcasters monetize content through ad insertion.

Reporters can harness the power of AI to increase the speed at which they conduct research and vet information through one vendor’s offering, while another vendor is focused on indexing individual frames of content and making it discoverable in real time.

AI is not poised to replace people, but it is changing the way broadcasters carry out a number of tasks.

Super Slow Motion

Some significant breakthroughs in AI technology “allow us to understand image content in a way that was not possible before,” says Olivier Barnich, head of innovation at EVS. “Ten years ago, it was not possible to recognize faces and chairs and television sets in every single image like we can do now.”

Those breakthroughs have led to a “deep understanding of audio, video and metadata content,” he says, and EVS is working to leverage that understanding.

During the Super Bowl, Barnich says, EVS carried out a proof of concept for Fox on a super slow motion project. Typically, slow motion is achieved by repeating frames: to slow playback by a factor of three, each frame is shown three times. But there’s no fluid transition between the last repeat of one frame and the first showing of the next. EVS has developed a replay technology that creates new in-between frames to smooth out the viewing experience of super slow motion plays.

Barnich says the technology uses a machine learning algorithm and draws on techniques used in teaching facial recognition. For a sporting application, he says, it comes down to teaching the system to generate an image from the image before it and the image after it.

“It learns how the video moves between image one and image three to generate image two,” he says.
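The difference between repeating frames and generating in-between frames can be illustrated with a minimal Python sketch. It is not EVS's method: the learned model Barnich describes is replaced here by a plain linear blend of the neighbouring frames, which cross-fades rather than following motion. The function names and the reduction of a frame to a short list of pixel intensities are assumptions made for illustration.

```python
from typing import List

Frame = List[float]  # assumption: a frame reduced to a flat list of pixel intensities


def repeat_frames(frames: List[Frame], factor: int) -> List[Frame]:
    """Conventional slow motion: show every source frame `factor` times."""
    return [frame for frame in frames for _ in range(factor)]


def blend_frames(frames: List[Frame], factor: int) -> List[Frame]:
    """Fill the gaps between source frames with linear blends.

    A stand-in for a learned interpolator: each new frame is a weighted
    average of the frame before it and the frame after it.
    """
    if not frames:
        return []
    out: List[Frame] = []
    for before, after in zip(frames, frames[1:]):
        for step in range(factor):
            t = step / factor  # 0 at the earlier frame, approaching 1 at the later one
            out.append([(1 - t) * a + t * b for a, b in zip(before, after)])
    out.append(frames[-1])  # keep the final source frame
    return out


source = [[0.0, 0.0], [3.0, 6.0]]
repeated = repeat_frames(source, 3)  # jumps straight from the first frame to the second
blended = blend_frames(source, 3)    # steps gradually from the first frame to the second
```

With repetition, the picture holds still and then jumps; with blending, each output frame moves a fraction of the way toward the next source frame. A trained model would aim to place moving objects at their intermediate positions instead of fading between the two images.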

The AI super slow motion technology is not yet possible in real time, he says, but EVS is working to minimize the processing time so it will be useful in a live environment.

EVS used AI technology during the Super Bowl to create new frames for super slow motion replays to smooth over the viewing experience. The technology is not yet commercially available.

“It allows you to do things you cannot do with any other technology,” he says. The super slow motion project is an AI use case that’s “not about cost optimization, not about saving money. It’s about making something you cannot do without this technology.”

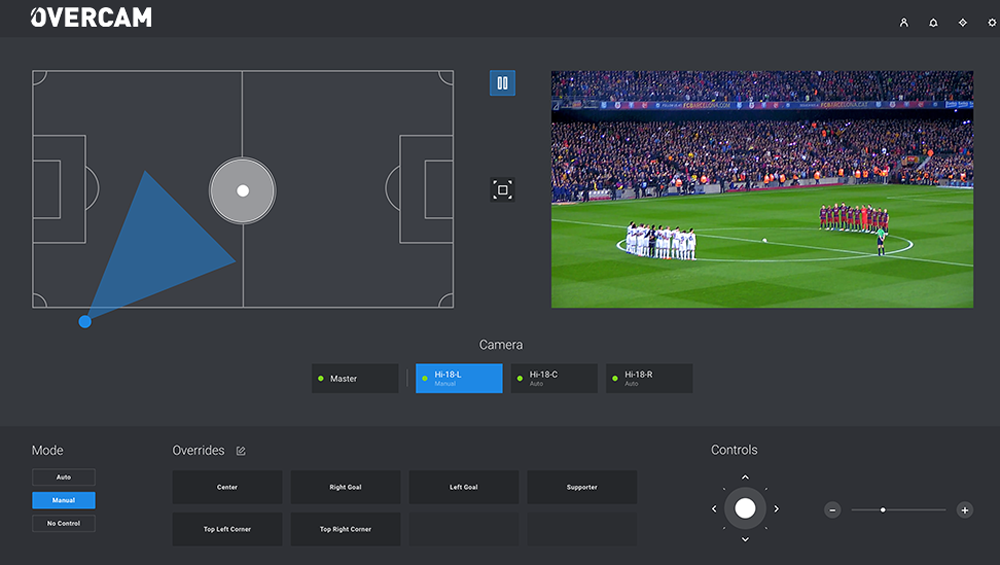

One of the other products EVS is working on does help with cost optimization. Barnich says Overcam makes it possible to have offside camera positions driven by AI and machine learning. As a result, these Overcams don’t need camera operators for soccer games.

After analyzing videos produced by human offside camera operators, EVS used the information to understand the best framing for two offside cameras that could be operated by AI. EVS has about a dozen user acceptance tests from Europe and plans to have the product ready by the NAB Show for soccer applications. Overcam for other sports is expected to follow, he says.

Autoclipping And Metadata

Over at Avid, the company is applying AI and machine learning in several ways, the first being to streamline the creation of news and sports highlights that get pushed out over multiple platforms.

Ray Thompson, director of market solutions, broadcast and media at Avid, says autoclipping of incoming video streams can create highlights based on scene recognition or speech to text and then users can set parameters in MediaCentral Publisher settings to distribute them across platforms.

The advantage of machine learning, Thompson says, is that the system retains what it learns: once it recognizes a person and learns what to do with frames that include that person, “it looks for and finds that person if they’re the topic of the story.”

Avid had planned to show the autoclipping technology available through MediaCentral Publisher at the NAB Show before it was postponed due to coronavirus concerns. Avid said Tuesday that its new product introductions will now take place online in April.

Another area of focus that’s gained a lot of traction in the industry is metadata. AI and machine learning can automate tagging of historical content in libraries. AI can use “scene and facial recognition to enhance those clips and make the libraries more searchable and the content accessible,” Thompson says.

It’s possible to put an entire library of content into the cloud and let AI do the work.

“Once it’s done, you have a rich library full of metadata,” Thompson says. “The more you can do to automate, the better it’s going to be. Then you can use and monetize that content.”

And when it comes to monetization through ad insertion, AI can help create more personalized experiences for viewers on different platforms.

“You get ads pertinent to you as opposed to what you get on television,” where ads are targeted at a broad swath of demographics, Thompson says. MediaCentral Publisher makes it possible for broadcasters to leverage data to drive revenue through dynamic ad insertion programs, he adds.

At the same time, programmatic ad models are developing. “That’s an area where we see growth,” Thompson says.

There are still a number of points in the workflow to apply AI and machine learning, Thompson notes.

“Any process that exists today is a candidate for AI and machine learning,” he says.

For example, during the color correction process, a colorist will check every frame and manually adjust frames that need to be lighter or darker.

“This is an ideal candidate for being automated,” Thompson says. “There are all kinds of areas that can be automated that are manual and somewhat mundane to allow creators to focus on the creative part.”

Speeding Up Live Research

AI is already being used to improve the speed of live research, says Andreas Pongratz, X.News CEO. The product, conceptr, for conceptual research, has been in beta with a global customer. Pongratz expects it to become fully available by IBC 2020 in September.

X.News developed conceptr to “address the pains” of the company’s current offering, the x.news dashboard, which requires a certain level of maintenance to be useful for the reporter. “We’re making the efficiency and administration better and easier,” he says.

In terms of research, conceptr automatically collects entire articles that are behind tweets and makes those articles fully searchable. The user can rate specific sources with a thumbs up or down and can rank sources against each other.

At the click of another button, conceptr “extracts the concept of the article” to give the user the relevant high-rated sources and articles first, Pongratz says.

If a reporter is interested in the coronavirus, a topic that sources all around the world are covering, “whatever is rated higher and trending will come up” as that reporter’s personal recommended topic, he says.
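The idea of surfacing higher-rated and trending material first can be sketched in a few lines of Python. This is a rough illustration, not conceptr's actual logic: the `Article` fields, the +1/-1 encoding of thumbs up and down, and the use of a mention count as the "trending" signal are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Article:
    title: str
    source: str
    mentions_last_hour: int  # hypothetical stand-in for "trending"


def rank_articles(articles: List[Article], ratings: Dict[str, int]) -> List[Article]:
    """Order articles by the reporter's source rating, then by the trend signal."""

    def score(article: Article) -> Tuple[int, int]:
        # Assumption: thumbs up = +1, thumbs down = -1, unrated sources = 0.
        return (ratings.get(article.source, 0), article.mentions_last_hour)

    return sorted(articles, key=score, reverse=True)


ratings = {"wire-service": 1, "rumor-blog": -1}
articles = [
    Article("Outbreak rumor spreads", "rumor-blog", 900),
    Article("Health agency issues update", "wire-service", 400),
    Article("Local clinics prepare", "regional-daily", 150),
    Article("Travel rules change", "wire-service", 650),
]
ranked = rank_articles(articles, ratings)
```

In this sketch the source rating always outranks the trend signal, so a heavily shared article from a down-rated source still lands at the bottom of the list. A real product could weigh the two differently.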

Real-Time Content Indexing

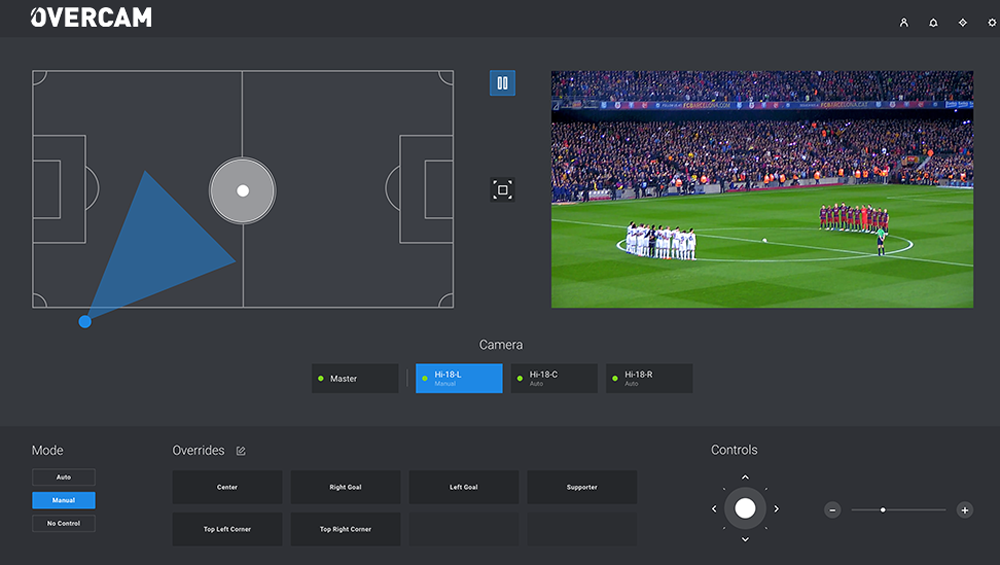

Another way AI helps reporters is through indexing content and making the details searchable. For the longest time, says TVU Networks CEO Paul Shen, having each frame of a video fully indexed and searchable “was only a dream.”

TVU MediaMind allows live video feeds to be automatically filtered based on user preference and clipped according to face or text.

Now, though, an AI-assisted workflow can handle real-time indexing of content and make it “discoverable to the precise frame,” he says.
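What “discoverable to the precise frame” could mean in practice is shown in the minimal Python sketch below. It is not TVU's implementation: it assumes some recognizer (not shown) has already produced a set of labels for each frame, and the function names and the 25 frames-per-second rate are illustrative.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Set


def build_frame_index(frame_labels: Sequence[Set[str]]) -> Dict[str, List[int]]:
    """Map each label to the frame numbers in which it appears."""
    index: Dict[str, List[int]] = defaultdict(list)
    for frame_number, labels in enumerate(frame_labels):
        for label in labels:
            index[label].append(frame_number)
    return dict(index)


def frame_to_seconds(frame_number: int, fps: float) -> float:
    """Convert a frame number to its offset from the start of the feed."""
    return frame_number / fps


# Assumption: labels per frame come from face or text recognition upstream.
frame_labels = [{"anchor"}, {"anchor", "mayor"}, {"mayor"}, {"stadium"}]
index = build_frame_index(frame_labels)
mayor_frames = index.get("mayor", [])
first_mayor_time = frame_to_seconds(mayor_frames[0], fps=25.0)
```

Because frames are indexed as they arrive, a search for a face or a piece of on-screen text returns exact frame positions, which a clipping tool could then use as in and out points.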

TVU has built the foundation for this technology, which is in beta, and had planned to show the real-time element of the workflow at the NAB Show. Since pulling out of the gathering earlier this week, the company says it is “exploring with the NAB alternative ways to share the news and demonstrations we planned for the April show.”

Shen says the technology can save a lot of time. One customer decreased the time necessary for creating a 10-second clip from an hour to about five minutes, he says, and the goal is to make it even more efficient.

AI is going “to make the production process much more efficient,” Shen says. “AI is not going to replace people.”

But he does believe AI will cause “news media behavior to change, especially for news and sports.”

“Fundamental changes” are likely along the entire media supply chain, including how the content is acquired and presented, he says. When broadcasters realize “how it will change their business,” they’ll realize that “if they don’t adopt this, they will die.”
