Automated Captioning Rises To The Cloud

Artificial intelligence and machine learning are driving improvements in accuracy and decreasing latency of automated captioning for broadcast content.

The technologies supporting automated captioning have improved significantly over the last few years while dropping in cost, making it possible for broadcasters to caption their content more economically than by relying on human captioners alone. The trend toward using hybrid and cloud models, rather than only on-prem, for the captioning portion of the workflow continues.

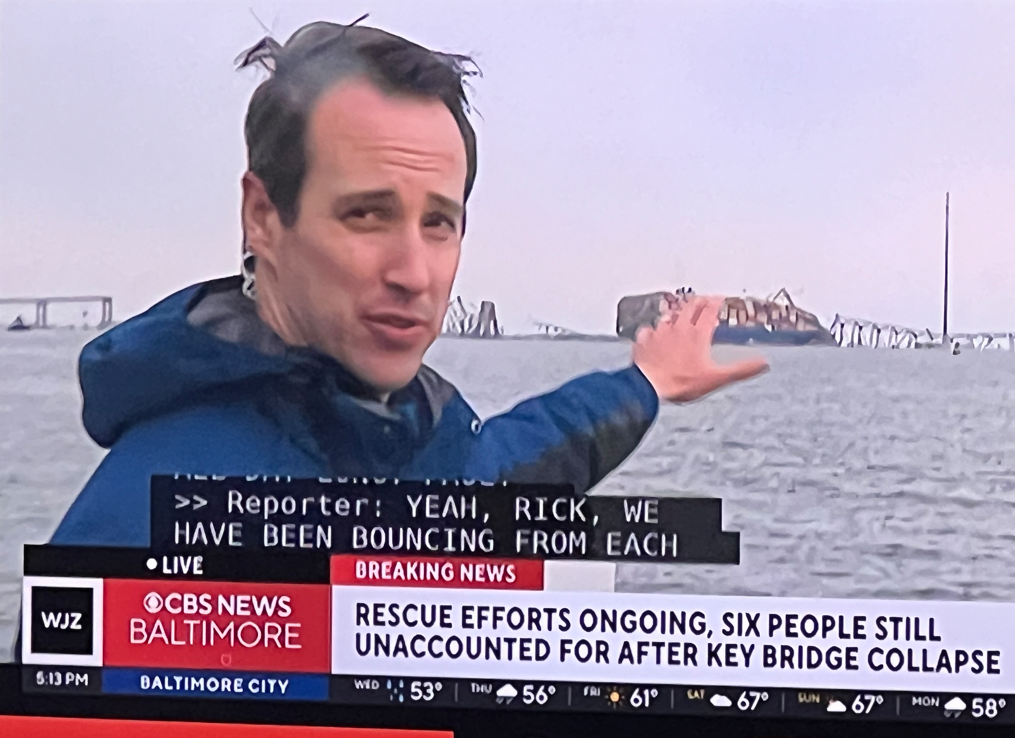

Vendors say the benefits of automated captioning and transcription services extend beyond meeting regulatory requirements. Captions make it easier for people in noisy environments, such as bars, to enjoy content. Transcription also makes content searchable, and therefore more useful to the broadcaster, and prepares it for translation, which in some cases happens on the fly with minimal latency.

Improving technologies have managed to overcome some of the issues that made accurate and fast automated captioning difficult.

“Auto-transcription, auto-translation and natural language processing are the main tools we have today, and they are helping us in a big way,” says Sana Afsar, engineering manager at Interra Systems.

In the last few years, these technologies have improved dramatically while becoming more affordable, she says. “Languages are evolving, and machines are getting better and better, but they are not 100% accurate.”

Things like background noises, dialect and slang can lower accuracy, as can switching languages in mid-speech, which might happen when words are adopted from one language into another, Afsar says.

Even with these challenges, the company’s Baton Captions automated captioning still works well, and machine-assisted review processes can flag sections of the captioning where confidence in the accuracy is low, she says.
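The idea of flagging low-confidence sections for review can be sketched roughly as follows. This is only an illustration, not how Baton Captions works: the `Word` structure, the per-word `confidence` score, the 0.80 threshold and the sample values are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Word:
    text: str
    start: float       # seconds from the start of the program
    end: float
    confidence: float  # hypothetical engine score, 0.0 to 1.0


def _close(run: List[Word]) -> Tuple[float, float, str]:
    """Collapse a run of adjacent words into one (start, end, text) span."""
    return (run[0].start, run[-1].end, " ".join(w.text for w in run))


def flag_low_confidence(words: List[Word], threshold: float = 0.80):
    """Group consecutive words scoring below `threshold` into review spans."""
    spans = []
    run: List[Word] = []
    for word in words:
        if word.confidence < threshold:
            run.append(word)
            continue
        if run:  # a confident word ends the current low-confidence run
            spans.append(_close(run))
            run = []
    if run:  # the transcript ended inside a low-confidence run
        spans.append(_close(run))
    return spans


# Made-up sample data: two adjacent words the engine was unsure about.
sample = [
    Word("the", 0.0, 0.2, 0.98),
    Word("oh", 0.2, 0.4, 0.41),
    Word("micron", 0.4, 0.9, 0.37),
    Word("variant", 0.9, 1.4, 0.95),
]
for start, end, text in flag_low_confidence(sample):
    print(f"review {start:.1f}s-{end:.1f}s: {text}")
```

Grouping adjacent words into a single span means a human reviewer jumps to one stretch of the program instead of checking word by word.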

Matt McEwen, VP of product management for TVU Networks, says accuracy of speech to text can depend on many factors.

“AI [artificial intelligence] engines are getting better at detecting accents and different languages,” he says. “They’re learning engines, getting better and better. It’s amazing to me in the time we’ve been doing this, how much it’s improved.”

TVU Transcriber is an independent microservice that can be added to any TVU Networks product, McEwen says. It is available in the cloud on a pay-by-the-minute model, although the module started out on-prem in a TVU hardware box, he says. TVU Transcriber can automatically detect whether a stream has captions, then add captioning when none are present, he says.

“Some customers use us just for captioning,” he says.

Background noise can be a factor, so ENCO’s enCaption tool focuses on the vocal range and ignores sounds outside the vocal range, Bill Bennett, media solutions and accounts manager for ENCO, says. “It’s all about accuracy. But it’s also all about speed. They’re intertwined,” he says.

Bennett calls enCaption a solution that is cost-effective, as accurate as humans in traditional live situations and faster than humans for file-based captioning. “We have been honing the technology for almost two decades to get it to a sweet spot where both live and file-based workflows are handled by a single product,” he says.

EnCaption started out on-prem, but ENCO has been evolving to meet the move toward hybrid and cloud workflows, Bennett says.

“We’re growing a native cloud version of our enCaption product,” he says. “It’s completely being rebuilt from the ground up.”

Voice Interaction’s Audimus.Media uses deep neural networks and machine learning algorithms, along with a large amount of data, to transcribe speech to text automatically, Voice Interaction CEO João P. Neto says.

Audimus.Media can produce live English-to-Spanish translation.

Transcribing content creates time stamps of certain words in a video, making it easier to search and index the content, Neto says.
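As a rough illustration of why time-stamped words make video searchable, the sketch below builds a simple word-to-timestamps index. It is not Audimus.Media's method; the function names and the `(word, start_seconds)` input format are assumptions chosen for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def build_index(timed_words: Iterable[Tuple[str, float]]) -> Dict[str, List[float]]:
    """Map each normalized word to the times (in seconds) at which it is spoken."""
    index = defaultdict(list)
    for word, start in timed_words:
        key = word.lower().strip(".,!?;:\"'")  # drop case and edge punctuation
        if key:
            index[key].append(start)
    return dict(index)


def find_word(index: Dict[str, List[float]], term: str) -> List[float]:
    """Return every timestamp at which `term` occurs, or an empty list."""
    return index.get(term.lower(), [])


# Made-up transcript fragment: (word, start time in seconds).
transcript = [("Storm", 12.5), ("warning", 13.0), ("storm.", 48.2)]
index = build_index(transcript)
print(find_word(index, "storm"))
```

An editor looking for a particular moment could then seek the player to any of the returned offsets rather than scrubbing through the whole clip.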

“We are getting very accurate results, which is much better than a manual operator” who might have more errors, deletions and dropped words or sections of content, he says.

Latency persists, however. Even with a fast speech-to-text engine, some latency can be necessary as the engine waits to gather enough context to determine what the speaker said and provide an accurate transcription, he says. Low latency, he says, is two to three seconds between the spoken words and their appearance in the closed captions.

Russell Vijayan, Digital Nirvana’s director of product, says a number of different technologies, like natural language processing algorithms and speech to text, are the foundation of captioning tools.

“All the technologies combined is what helps broadcasters produce quality captions,” he says.

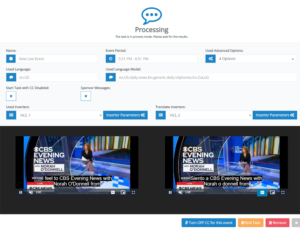

User interface for Digital Nirvana’s Trance captioning software.

Ed Hauber, director of business development at Digital Nirvana, says, “The machine-generated process can produce a highly accurate transcript, meaning 90% or greater in terms of accuracy.”

Incorporating quality automated speech-to-text technology helps broadcasters in two fundamental ways, by reducing costs and time, he adds.

Vijayan says a hybrid or cloud-based system for automated captioning makes it possible to update dictionaries when names or words, such as COVID Omicron, are not spelled properly, whereas an on-prem-only system cannot be updated in the same way.

“The first time, it will get Omicron wrong, but the cloud communicates to the system on-prem, so next time, on the fly, it will give you an accurate spelling,” he says.
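The dictionary-update behavior described here can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Digital Nirvana's implementation: the `CaptionDictionary` class, its methods and the misheard phrase "oh micron" are hypothetical, and a real system would sync entries from the cloud rather than take them from a local call.

```python
import re


class CaptionDictionary:
    """Hypothetical store of spelling corrections applied to caption text."""

    def __init__(self):
        self._corrections = {}  # lowercased misheard phrase -> correct spelling

    def add(self, misheard: str, correct: str) -> None:
        """Register a correction, e.g. one pushed down from a cloud service."""
        self._corrections[misheard.lower()] = correct

    def apply(self, text: str) -> str:
        """Replace every known misheard phrase in `text` with its correction."""
        if not self._corrections:
            return text
        # Try longer phrases first so they win over shorter overlapping ones.
        phrases = sorted(self._corrections, key=len, reverse=True)
        pattern = re.compile(
            r"\b(" + "|".join(re.escape(p) for p in phrases) + r")\b",
            re.IGNORECASE,
        )
        return pattern.sub(lambda m: self._corrections[m.group(0).lower()], text)


dictionary = CaptionDictionary()
raw = "The Oh Micron variant is spreading"

first_pass = dictionary.apply(raw)       # no entry yet, text is unchanged
dictionary.add("oh micron", "Omicron")   # the update arrives
second_pass = dictionary.apply(raw)      # corrected on the next pass
print(first_pass)
print(second_pass)
```

The first pass leaves the error in place and the second pass corrects it, mirroring the "first time wrong, next time right" behavior Vijayan describes.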

Digital Nirvana’s Trance is a web-enabled, enterprise-class tool that Hauber calls a “DIY” self-service captioning tool.

“It enables operators to do the work themselves. They have the staff and workflow to produce the media,” he says.

Caption Services is a turnkey version of Trance that delivers the captioned content back to the client.

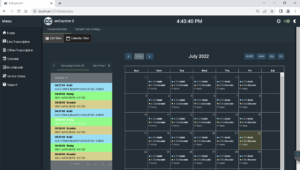

ENCO’s enCaption5 HTML5 Web browser interface showing in-browser editing and viewing of automation schedule for Word Model loading, and encoder and speech engine control.

Matt Mello, technical sales manager at EEG, an Ai Media company, says human captioning will remain an option, but the technology behind automatic captioning is getting better all the time and its costs are going down.

Ai Media’s Lexi is an automated closed captioning system, while Smart Lexi adds human intervention.

“With Lexi, you can also add custom words, phrases, people’s names, teach it what it should be listening for,” Mello says.

Mello anticipates that broadcasters will need to caption all OTT content, saying regulations in place now are “somewhat vague” in how they relate to closed captioning for OTT.

“Broadcasters are starting to add closed captioning into workflows that are different to what they do live,” he says.

And that means more demand for closed captioning.

Bennett says that captions do more than help broadcasters meet FCC regulations.

“When captions exist for video, they can find the nugget they are looking for directly in that video,” making it searchable, he says.

That helps viewers as well as producers and editors, he says.

Additionally, he says, captioning does more than serve deaf and hard-of-hearing audiences. It’s useful in noisy environments or where the video may be muted.

“If you don’t caption your content, you miss getting in touch with your audience,” Bennett says.

And transcribed is just a short step away from translated.

In early July, ENCO bought TranslateTV as part of its drive toward real-time translation between multiple languages.

“It’s a form of universal translator. You feed in English words and get English text and Spanish text and ultimately the other way around as well, and far more languages in the future,” he says. “We’re a multi-language, multinational world now.”
