TVN Tech | OTT Monitoring Faces Complex Challenges

Buffering and jumpy video can send OTT viewers from one piece of content to another, so vendors are improving ways for broadcasters to monitor quality, diagnose problems and automatically repair issues.

Broadcasters are creating more video content than ever for distribution, whether it’s delivered over the air — a method the broadcast industry has long experience in — or over the top, where there are still some growing pains.

A poor user experience can prompt a viewer to abandon content or the app altogether, and dropped ad frames can result in lost revenue. This means it’s imperative for broadcasters to monitor their OTT output. But monitoring the quality of service and quality of experience for OTT viewers is more complicated than for traditional OTA distribution because there are so many more variables in OTT distribution.

Vendors are working to simplify the monitoring process, with one offering an agile commercial model intended to save broadcasters money on licensing fees for its software.

Others are offering technologies like monitoring by exception, the use of analytics, real-time distribution alerts, unidirectional transmission and artificial intelligence combined with machine learning. Capabilities like event-based monitoring, data correlation and real-time repair are expected to be unveiled at April’s NAB Show or later this year.

Over time, it’s likely the overall reliability of OTT will merge with the reliability of OTA to deliver an equal quality of experience to viewers no matter the platform.

Improving OTT’s UX

For the time being, the industry is fighting to improve the overall user experience (UX) so OTT viewers don’t have to suffer through buffering problems or poor synchronization between audio and video or between audio and closed captioning.

“If viewers are not happy with the viewing experience, it’s easy to switch,” says Anupama Anantharaman, VP of product management at Interra Systems. Any monitoring tool a broadcaster chooses to use should be able to monitor a variety of performance categories, she says.

One thing that complicates the monitoring process, says Miguel Serrano, VP of cloud at Haivision, is that the measures of quality in the IP world are different from the OTA world. For OTT, “you want to measure things that never happen over the air,” he says.

Such variables include how much buffering the viewer suffers, how many frames are dropped and how many seconds elapse between clicking play and being served the content, Serrano says.
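As a rough illustration of the variables Serrano lists, the sketch below computes startup delay, buffering and dropped-frame figures for a single playback session. The `PlaybackSession` fields and the `qoe_summary` function are hypothetical names chosen for this example, not part of any vendor's product.

```python
from dataclasses import dataclass


@dataclass
class PlaybackSession:
    """Hypothetical per-viewer measurements reported by a player."""
    click_time_s: float        # when the viewer pressed play
    first_frame_time_s: float  # when the first frame was shown
    watch_time_s: float        # seconds of content actually played
    stall_time_s: float        # seconds spent buffering mid-stream
    frames_rendered: int
    frames_dropped: int


def qoe_summary(session):
    """Reduce one session to three quality-of-experience figures."""
    total_frames = session.frames_rendered + session.frames_dropped
    total_time = session.watch_time_s + session.stall_time_s
    return {
        "startup_delay_s": session.first_frame_time_s - session.click_time_s,
        "rebuffer_ratio": session.stall_time_s / total_time if total_time else 0.0,
        "dropped_frame_ratio": session.frames_dropped / total_frames if total_frames else 0.0,
    }


# Illustrative numbers only.
session = PlaybackSession(10.0, 12.5, 95.0, 5.0, 2970, 30)
summary = qoe_summary(session)
```

None of these figures has an over-the-air equivalent, which is the point Serrano makes about measuring quality in the IP world.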

Mediaproxy CEO Erik Otto says part of what makes monitoring OTT quality difficult is the fact that “OTT in general came up very quickly.” In short, he says, broadcasters went from the world of multicast, or one to many, to unicast, or one to one. To do that, they had to “shoehorn” their signal into the “civilian world of the internet,” Otto says.

As Otto puts it, “broadcasters weren’t ready for it. They didn’t want to plug their trusted SDI cable into a world they didn’t understand” and that wasn’t as reliable as an OTA signal. “One thing the internet really isn’t is reliable.”

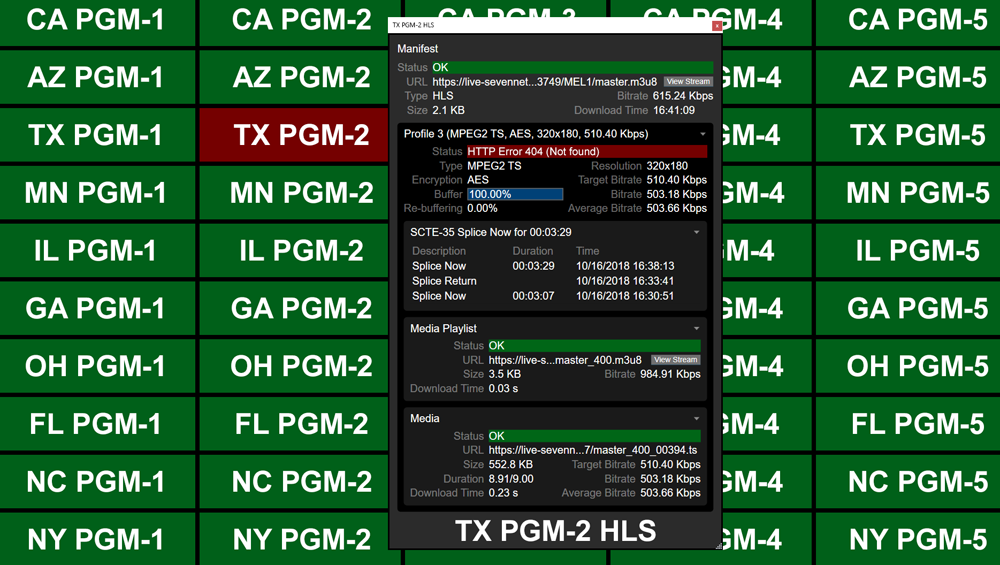

Mediaproxy OTT MCR Monitoring: Mediaproxy’s monitoring service keeps track of multiple streams of content. (Source: Mediaproxy)

This is a critical concern, Otto says: Broadcasters can’t afford “to lose one frame” of an ad, since advertisers will seek make-goods.

John Shoemaker, Qligent’s director of sales, says some of the weak points in transmission will be related to congestion of the available bandwidth, as well as how many hops the content has to take to get from the broadcaster to the end user.

Shoemaker says the diversity of distribution platforms and pathways makes the OTT world both ubiquitous and akin to “the Wild West.”

He says there are “multiple points of entry to get into it, and multiple points to deliver. OTT is designed to go everywhere, to everyone if needed, in any place across the world.” The very nature of OTT, he says, “makes it complicated to monitor and ensure quality of service to the end user.”

Further complicating the monitoring of quality of experience is the fact that two people could be side by side watching the same content on two devices and one experiences quality issues while the other doesn’t.

All of that complexity requires monitoring tools that are agile and can evolve and change on the fly, says Kevin Joyce, chief commercial officer at TAG Video Systems.

Finding Efficient Solutions

“The industry is truly starting to think digitally,” which will help it become more efficient with its hardware and software, Joyce says.

Haivision’s LightFlow offering can detect in real time if a CDN is working reliably. (Source: Haivision)

For example, Joyce says, one national broadcaster is buying a bank of OTT probing and monitoring licenses and will use them across multiple locations for OTT as well as live production monitoring. When a license isn’t needed for live production, it becomes available in a bank in the cloud, and someone else can use the license for OTT monitoring.

“In the past, people bought excess capacity,” and most equipment ran at 30% utilization rates, Joyce says. “The future is about maximizing assets.” TAG is moving toward more “transactional consumption,” he adds.

Another way broadcasters can save money on the monitoring front is through the emerging trend of monitoring by exception.

For a broadcaster who sends content from 10 channels to the internet, each piece of content will require six or more profiles, or renderings of the same content, to meet different device and resolution requirements. Instead of just having to monitor those 10 channels, it’s necessary to monitor 60 or more channels because of the different profiles of each piece of content. That is well beyond what a human is capable of monitoring efficiently, says Mediaproxy’s Otto.

“We can’t monitor everything the traditional way,” Otto says. “We have to accept that everything is good and that when things go wrong, then we get alerted.”
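The arithmetic and the idea behind monitoring by exception can be sketched in a few lines. This is a minimal illustration, not Mediaproxy's implementation; the profile names, the thresholds and the `exceptions_only` function are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class RenditionStatus:
    channel: str
    profile: str
    dropped_frames: int
    rebuffer_ratio: float  # fraction of playback time spent buffering


# Hypothetical alert thresholds; a real deployment would tune these.
MAX_DROPPED_FRAMES = 0
MAX_REBUFFER_RATIO = 0.01


def exceptions_only(statuses):
    """Return only the renditions that breach a threshold."""
    return [
        s for s in statuses
        if s.dropped_frames > MAX_DROPPED_FRAMES
        or s.rebuffer_ratio > MAX_REBUFFER_RATIO
    ]


channels = [f"ch{n}" for n in range(1, 11)]                      # 10 channels
profiles = ["240p", "360p", "480p", "720p", "1080p", "2160p"]    # 6 profiles each
statuses = [RenditionStatus(c, p, 0, 0.0) for c in channels for p in profiles]

statuses[7].dropped_frames = 3  # simulate one faulty rendition
alerts = exceptions_only(statuses)
```

Here the operator is shown one alert instead of a wall of 60 healthy streams.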

Mediaproxy has built a workflow to allow broadcasters to monitor OTT quality in the multiviewer environment, but the company has started to make it interactive so it can be used as a tool to quickly chase down incidents.

Analytics goes hand-in-hand with data gathering, and Qligent uses analytics to correlate quality of service and quality of experience data across different distribution methodologies and devices.

Shoemaker says analytics provide “a bigger picture of your entire distribution methodology and model.” They can help ferret out why two viewers in the same house watching the same content on their individual devices might have different experiences, he says.

Qligent Vision is the company’s base product for traditional quality monitoring, and the addition of Vision Analytics provides those details for OTT services.

Another service is Interra’s Orion Central Manager, which will be promoted at NAB 2020. It is an end-to-end monitoring system that provides real-time updates for streamed and linear content, Anantharaman says. The web-based service monitors the health of probes, collects monitoring data from probes and provides alert summaries across probes.

“We can show how the videos are traversing through the workflow” to see where problems are occurring, she says.

Some of those problems occur at the consumption edge, and this is where Vitec focuses its efforts. Vitec makes sure all transmitted packets have arrived in good order. Internet transport protocols like HTTP Live Streaming (HLS), which uses the Transmission Control Protocol (TCP), and MPEG-DASH are inefficient for OTT needs, says Kevin Ancelin, Vitec’s VP of global sales for broadcast.

Vitec uses Reliable Internet Stream Transport (RIST), Secure Reliable Transport (SRT) and Zixi for its unidirectional transmission approach, which retransmits only packets that didn’t arrive. This approach, Ancelin says, is akin to dedicating one lane of a road to normal traffic, which is the original transmission, and a second lane to sporadic traffic, which is the retransmitted packets.

“They can move at much higher bit rate with lower latency,” Ancelin says.
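The idea of resending only what went missing can be shown with a toy simulation. This is a simplified sketch of selective retransmission in general, not the actual RIST, SRT or Zixi protocol logic; the function names are hypothetical, and the receiver is assumed to know the first and last sequence numbers of the batch.

```python
def missing_sequences(received, first_seq, last_seq):
    """List the sequence numbers in [first_seq, last_seq] that never arrived."""
    seen = set(received)
    return [seq for seq in range(first_seq, last_seq + 1) if seq not in seen]


def deliver_with_selective_retransmit(sent, lost):
    """Simulate one pass with losses, then resend only the gaps.

    sent: dict mapping sequence number -> payload
    lost: set of sequence numbers dropped on the first pass
    """
    # First "lane": the original transmission, minus whatever was lost.
    received = {seq: payload for seq, payload in sent.items() if seq not in lost}

    # Second "lane": only the packets the receiver reports as missing.
    to_resend = missing_sequences(received.keys(), min(sent), max(sent))
    for seq in to_resend:
        received[seq] = sent[seq]

    ordered = [received[seq] for seq in sorted(received)]
    return ordered, to_resend


sent = {seq: f"pkt{seq}" for seq in range(1, 9)}
payloads, resent = deliver_with_selective_retransmit(sent, lost={3, 6})
```

Only two of the eight packets travel twice, which is where the bandwidth saving in Ancelin's two-lane analogy comes from.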

At Haivision, machine learning and artificial intelligence are being used to optimize video quality and multi-CDN delivery.

Serrano says LightFlow, which Haivision acquired last year, can detect in real time if a CDN is working reliably. If a region is having a problem with the CDN, LightFlow detects it and switches to a different CDN.
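A bare-bones version of that switching decision might look like the following. It is a sketch under stated assumptions, not how LightFlow works: the error-rate input, the 2% threshold and the `choose_cdn` function are all invented for illustration, and no machine learning is involved.

```python
def choose_cdn(current, error_rates, max_error_rate=0.02):
    """Keep the current CDN while it is healthy; otherwise pick the best alternative.

    error_rates: dict mapping CDN name -> fraction of failed requests in a region.
    A CDN with no measurement is treated as unhealthy.
    """
    if error_rates.get(current, 1.0) <= max_error_rate:
        return current

    healthy = {name: rate for name, rate in error_rates.items() if rate <= max_error_rate}
    if not healthy:
        return current  # nothing better available, so stay put

    return min(healthy, key=healthy.get)


# Illustrative measurements for one region.
rates = {"cdn-a": 0.15, "cdn-b": 0.01, "cdn-c": 0.005}
selected = choose_cdn("cdn-a", rates)
```

Staying on a healthy CDN rather than always chasing the lowest error rate avoids needless switching between providers.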

In The Works

Later this year, some vendors will roll out new or updated quality of service and quality of experience monitoring tools.

Qligent is working on event-based, or dynamic, monitoring. Currently, probing OTT streams results in a round-the-clock charge, even if it’s needed for only three or four hours a day, Shoemaker says. With event-based monitoring, he says, “you’d only pay for service in the cloud during the time you’re using it.”

Qligent Vision Analytics Big Data: Qligent Vision Analytics shows key performance indicators (KPIs) on a real-time dashboard. (Source: Qligent)

TAG is working with machine learning and artificial intelligence to determine where errors have appeared in a channel path over time in a bid to forecast future errors. Joyce says TAG will show data correlation at NAB. With such a capability, Joyce says, “I think we will move away from, ‘Oh, we have an error,’ to ‘I think you might potentially have a problem here. Go take a look at it.’ ”

The sheer complexity of creating and transmitting content can cause problems with quality of service and quality of experience.

“From the time a video is created to the time it’s distributed, there are so many systems involved,” Anantharaman says. “Tools tell you where the problem is, but that’s not enough.”

The ideal, she says, is for repairs to be automatic. For example, if the monitoring tool detects that a specific encoder is problematic, the tool would communicate with the encoder management system via an API and tell it to move to a standby encoder.

“That is something our customers are looking for,” Anantharaman says.
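The automatic repair Anantharaman describes could be sketched as follows. The `EncoderManager` class is a hypothetical in-memory stand-in for an encoder management system's API, and the encoder names and error counts are invented; a real integration would make network calls to whatever interface the vendor exposes.

```python
class EncoderManager:
    """Hypothetical stand-in for an encoder management system's API."""

    def __init__(self, active, standby):
        self.active = dict(active)    # channel -> encoder currently on air
        self.standby = dict(standby)  # channel -> spare encoder

    def switch_to_standby(self, channel):
        # Swap roles so the faulty encoder becomes the spare for later repair.
        self.active[channel], self.standby[channel] = (
            self.standby[channel],
            self.active[channel],
        )
        return self.active[channel]


def auto_repair(manager, health_reports, max_errors=0):
    """Fail over every channel whose active encoder reports too many errors."""
    switched = {}
    for channel, errors in health_reports.items():
        if errors > max_errors:
            switched[channel] = manager.switch_to_standby(channel)
    return switched


manager = EncoderManager(
    active={"news": "enc-1", "sports": "enc-2"},
    standby={"news": "enc-1b", "sports": "enc-2b"},
)
# The monitoring tool reports 12 errors on the sports encoder, none on news.
switched = auto_repair(manager, {"news": 0, "sports": 12})
```

The monitoring tool only detects the fault and issues the instruction; the encoder management system carries out the switch.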

Haivision’s Serrano says that while metrics of quality are available, they do little to address actual problems.

Serrano says it’s important to know the quality of the audiences’ experience and what broadcasters can do to improve it.

Real-time changes in the workflow improve the quality right away, he says. It comes down to “what you can do in real time so you help your users.”

Over time, it is likely that the overall reliability of OTT will grow to parallel that of OTA, so both deliver an equal quality of experience to viewers.

“The two worlds are converging,” Serrano says.
