Vendors Tackle Task Of Measuring UHD, OTT

Test and measurement vendors recognize that UltraHD (4K/high dynamic range) and OTT distribution via the broadband and mobile networks will be a big part of television’s future and so are developing products to keep pace.

It’s challenging work, says Phillip Adams, managing director at Phabrix, which makes portable and rackmount systems to perform fault diagnosis and monitor compliance. The move from standard HD to UltraHD increases the data payload by a factor of eight, he says.

“That’s a big change. A lot of equipment changes in that process. Now all equipment is being designed with 12-gigabit per second interfaces, and a new set of instruments is necessary to analyze and create the 12-gigabit per second signals.”
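The factor of eight can be checked with simple arithmetic. The sketch below is an illustration only, and it assumes an HD baseline of 1920x1080 at 30 full frames per second and a UHD target of 3840x2160 at 60 frames per second, with bit depth and chroma sampling unchanged; the article does not spell out which formats Adams had in mind.

```python
def active_pixel_rate(width, height, frames_per_second):
    """Active pixels delivered per second (blanking and ancillary data ignored)."""
    return width * height * frames_per_second


# Assumed formats, not taken from the article.
hd = active_pixel_rate(1920, 1080, 30)   # HD baseline: 1080 lines, 30 full frames/s
uhd = active_pixel_rate(3840, 2160, 60)  # UHD target: 2160 lines, 60 frames/s

# Four times the pixels per frame and twice the frames per second.
payload_factor = uhd // hd
print(payload_factor)
```

Under those assumptions, the jump from a 1.5-gigabit class HD interface to a 12-gigabit interface follows directly from the pixel count and frame rate.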

Phabrix’s Qx series includes instruments for rapid fault diagnosis, compliance monitoring and product development for the next generation of video formats.

Much of that increase stems from the dynamic range, the difference between the brightest and darkest pixels. The wider the range, the greater the contrast and the more natural the pictures.

“When the sun comes up, the sun would blind you,” Adams says. “That never happens on television, but, in the future, the televisions that are coming will have much higher brightness…. You may have to look away.”

“It’s a change in physics, in the way you make the cameras and capture the images,” Adams says. “We need new instruments to understand what is happening with the high-dynamic range.”

Phabrix’s Qx12G 4K/UHD generator, analyzer and monitoring solution handles both quality control and compliance testing for 4K.

The Qx’s HDR and Wide Color Gamut (WCG) toolset has instruments that enhance the visualization and analysis of 4K and HD-SDI content to speed workflows, according to the company. The HDR/WCG tools include a signal generator, CIE chart, luminance heat-map, vectorscope and waveform.

With the newer light-emitting diode (LED) and organic light-emitting diode (OLED) television sets, HDR will also make it possible to see a lot more detail in low light conditions, says Charlie Dunn, general manager of Tektronix’s video product line.

And, he says, the HDR workflow requires new equipment, including the cameras, light meters, monitors, editing software, not to mention the systems to monitor the broadcast signals.

Tektronix’s PRISM media monitoring and analysis platform provides measurements and displays that show network performance and problems and reduce the time to fault isolation and remedy, he says.

The vendors are also racing to meet demand for test and measurement of OTT.

According to Erik Otto, Mediaproxy CEO, reining in the scale and complexity of OTT monitoring is a priority for broadcasters, and it’s necessary that traditional technology evolve to monitor the streams efficiently.

“Developing tools that are familiar to engineers but translate to the structure of OTT streams will make the transition easier,” Otto says.

The growing demand for mobile has put broadcasters in an uncomfortable spot, Otto says. Media companies are “sending their precious content off into the nebulous world of the internet,” he says. “It goes against everything they’ve ever done.”

The broadcasters have long dealt with terrestrial transmitters and satellites. The transmitter is “their comfort zone,” which allows them to get a return feed, which is an easy way to check the signal, Otto says.

The traditional ways of monitoring the OTT signal are not possible because the streams are in different formats, he says. “It’s not broadcast grade. It’s going to civilian devices. The tooling has to change.”

The best way to monitor internet streams is to be closest to the source where the content is being packaged, which means a data center, Otto says. That will minimize variables in the path, giving a truer indication about the quality, he says.

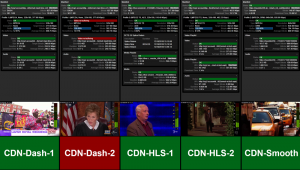

Mediaproxy’s new interactive real-time monitoring interface for live HLS, DASH and SmoothStreaming (OTT) streams.

Mediaproxy installs its LogServer software in the cloud, where it performs packet-level analysis and real-time monitoring with low resource demands. Monitoring is done by exception, sending detected events to the customer’s monitoring facility to visualize the state of the streams in real time, Otto says.
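Monitoring by exception can be pictured with a short sketch. The code below is a generic illustration and not LogServer’s actual design or API: the `SegmentSample` record, the `detect_exceptions` function and the bitrate threshold are all hypothetical names and values chosen for the example. The point is that only rule violations leave the cloud, not the streams themselves.

```python
from dataclasses import dataclass


@dataclass
class SegmentSample:
    """One measurement of a stream segment (hypothetical record layout)."""
    stream_id: str
    sequence: int
    bitrate_kbps: float
    download_ok: bool


def detect_exceptions(samples, min_bitrate_kbps=1500.0):
    """Yield (stream_id, sequence, reason) only for samples that break a rule."""
    last_sequence = {}
    for s in samples:
        previous = last_sequence.get(s.stream_id)
        if not s.download_ok:
            yield (s.stream_id, s.sequence, "segment download failed")
        elif s.bitrate_kbps < min_bitrate_kbps:
            yield (s.stream_id, s.sequence, "bitrate below threshold")
        if previous is not None and s.sequence != previous + 1:
            yield (s.stream_id, s.sequence, "sequence gap")
        last_sequence[s.stream_id] = s.sequence


samples = [
    SegmentSample("news", 1, 3000.0, True),    # healthy, nothing reported
    SegmentSample("news", 2, 900.0, True),     # bitrate too low
    SegmentSample("news", 4, 3100.0, True),    # segment 3 never arrived
    SegmentSample("sports", 1, 5000.0, False), # download failed
]
events = list(detect_exceptions(samples))
for event in events:
    print(event)
```

Four samples go in and three small event records come out, which is the kind of reduction that makes it unnecessary to send full payloads back to a central facility.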

Placing the LogServer in the cloud nearest to the CDN reduces bandwidth consumption and costs by not having to send payloads back to master control, Otto says.

Linking on-premises LogServer monitoring of outgoing playout streams to cloud-based LogServer instances that monitor and analyze CDN edge streams allows for unified visibility of all streams using Mediaproxy’s Monwall multiviewer, Otto says. Operators and engineers receive analysis data for all streams without having to backhaul streams from the remote sites, he adds.

Otto says LogServer is also useful for situations where an operator or broadcaster may want to launch one or more temporary OTT channels for special sporting events. The software captures and monitors the services, so the entire workflow, including compliance and logging, can be scaled with available OTT services, Otto says.

With LogServer, he says, Mediaproxy is able to support new standards, including encoding profiles, media over IP formats, and DRMs as they take hold. “The agility and flexibility of LogServer means that OTT service operators can stay one step ahead of market trends and introduce innovative revenue generating services.”

Otto says Mediaproxy’s user interfaces are comfortably familiar for broadcasters. “It looks the same. It just has different data.”

Dunn says OTT has created an enormous number of new signals that must be monitored. Cloud monitoring probes ensure quality is good and provide a quick means of finding problems. Because of the sheer volume of signals, this monitoring must be done automatically via artificial intelligence.

Tektronix offers a computerized means of assessing the subjective picture quality, a value commonly stated as Mean Opinion Score (MOS). TekMOS automatically ranks a picture on a scale of one to five, with five the best and one being unwatchable.
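For context, a Mean Opinion Score in its traditional form is the arithmetic mean of ratings given by a panel of human viewers on that one-to-five scale. The sketch below shows only that conventional calculation; it says nothing about how TekMOS arrives at its score, and the panel ratings are made up for the example.

```python
from statistics import mean


def mean_opinion_score(ratings):
    """Average a panel's ratings on the 1 (unwatchable) to 5 (best) scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must lie between 1 and 5")
    return mean(ratings)


panel = [4, 5, 3, 4, 4]  # invented ratings from five viewers
mos = mean_opinion_score(panel)
print(mos)
```

An automated tool aims to predict this number without convening a panel.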

Picture quality is typically evaluated either using full reference, that is, with two sources, or no-reference. Full reference measurements require two video sources with frames aligned temporally and spatially, and can be difficult to do in real time on live material.

A no-reference measurement does not require temporal or spatial alignment and can operate on multiple image quality scales or formats. However, it can be difficult to correlate the computed score with a viewer’s subjective score.

Further, quality measures can be subjective, reflecting the human viewer’s perception of quality, or objective, in which an algorithm attempts to calculate a score that closely correlates with subjective opinions.
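To make the full-reference case concrete, the sketch below computes peak signal-to-noise ratio (PSNR), a widely used full-reference measure. It is offered only as an example of the category; the article does not say which full-reference metrics any vendor uses, and the tiny pixel lists are invented. Note that the function needs both the original and the processed picture, already aligned sample for sample, which is exactly the requirement that is hard to meet on live material.

```python
import math


def psnr(reference, distorted, max_value=255):
    """Peak signal-to-noise ratio in dB between two aligned pixel sequences."""
    if len(reference) != len(distorted):
        raise ValueError("frames must be aligned and the same size")
    if not reference:
        raise ValueError("frames must not be empty")
    # Mean squared error between corresponding samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical pictures
    return 10 * math.log10(max_value ** 2 / mse)


reference = [50, 100, 150, 200]  # invented 8-bit luma samples
distorted = [52, 98, 149, 203]   # the same samples after lossy processing
score = psnr(reference, distorted)
print(round(score, 1))
```

A no-reference model has no `reference` argument at all and must judge the processed picture on its own.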

Harnessing the power of machine learning, the TekMOS no-reference model technology emulates what a person does to determine if a picture is good or bad. According to Dunn, TekMOS can work on file-based content or live signals equally well. The system uses a support vector machine (SVM) for classification and regression analysis.

The TekMOS can be tuned for future forms of compression, Dunn adds. “It works the same way my eye would look at a picture and say it’s good or bad. It does that without any knowledge of what it looked like before the compression. Many other systems require before and after.”
