NewsTECHForum | News Orgs Set Goals For Election Coverage

NEW YORK — Kicking off the 2019 iteration of TVNewsCheck’s annual NewsTECHForum at the New York Hilton on Monday was a panel discussion pointedly titled Technology and 2020 Election Coverage. Most of the initial talk, however, took a decidedly analog bent.

When asked by moderator Michael Depp, editor of TVNewsCheck, how news organizations can better grasp what voters are concerned about during the campaign season — after failing to do so four years ago — one panel member summed up the collective’s response best:

“Put more boots on the ground,” said Brian Scanlon, the Associated Press’s global director for U.S. election services.

Scanlon added that the extra reporting manpower should also be deployed to areas that have historically proven key to victory in presidential elections.

“If you looked at newsrooms, they were so Washington-driven [and] if you would have told people in 2016, ‘You need to put a lot more resources in Wisconsin’ or ‘You need to put a lot more resources in Michigan,’ they would’ve said, ‘Yeah, maybe,’ ” Scanlon said.

Barb Maushard, Hearst Television’s SVP of news, agreed, saying that news organizations need to focus on “what people are talking about, what will cause them to make the decisions they’re going to make, so that we can give them as much information as we possibly can.”

For Hearst’s part, Maushard said her organization will employ a new coverage strategy in 2020, sending reporters to all 50 states.

To stem the tide of misinformation in the ether, and to ward off attacks on the integrity of news outlets from certain combative candidates and their supporters, Maushard said that report-worthy information should be given white-glove treatment.

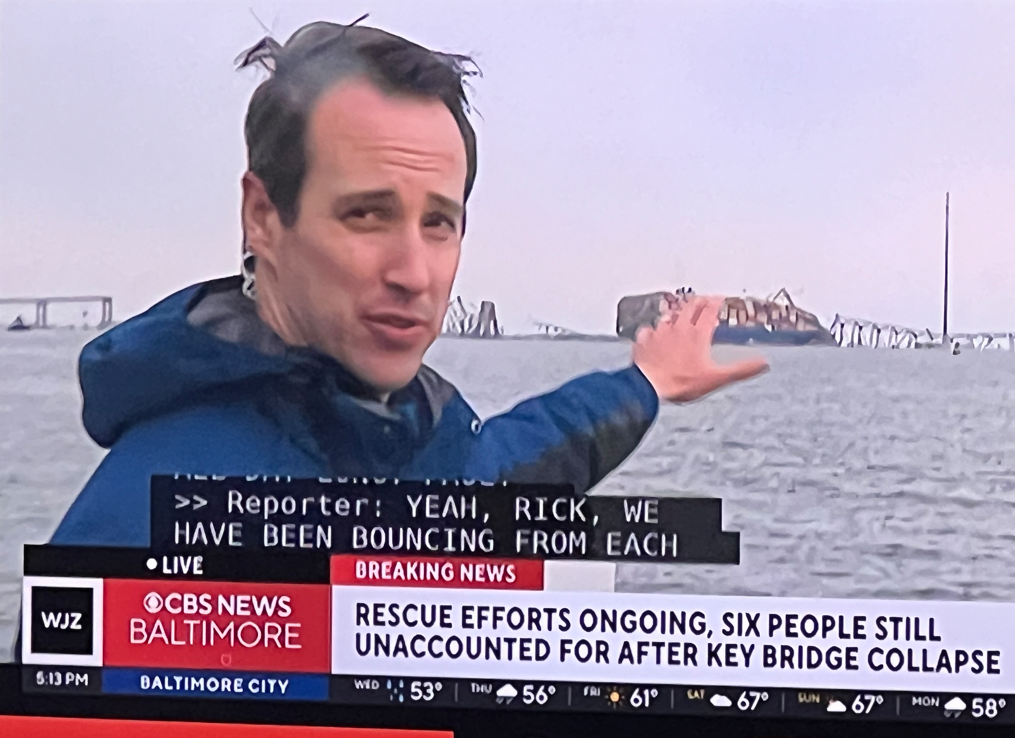

“We have to stay true to what those [good journalism] principles are,” Maushard said. “We have to be true to the facts, we have to be clear, and we have to be incredibly careful about the language we choose, the words we put on the screen to describe what’s happening.”

She observed that scrolling banners along the bottom of the screen could easily “send a message you don’t intend to send,” so nothing can be taken for granted.

Shifting gears to the tech side of things, Depp asked the panel about the role social media plays in reporting, and whether outlets are concerned about its influence over a public that increasingly scrutinizes the reliability of news.

Scanlon of the AP recounted an instance where one of his outlet’s reporters in Kentucky had tweeted that the AP had called a local election’s results. It hadn’t.

“It spread like wildfire,” Scanlon said of the tweeted report. “The next thing you know it’s picked up by one of the major cable news networks.”

Scanlon said that in this “hyper-reactive world,” where a single tweet can send shockwaves across the news landscape, reporters and outlets as a whole should “take an extra second to really verify what they’re doing.”

He added with a chuckle: “I think every organization looks at it as a challenge, and says: ‘We’ve got to make sure [our people] don’t do something stupid.’ ”

Therefore, news outlets constantly need to reassess their standards and procedures, especially as social media technology evolves.

And as news production technology advances, so does the technology in the hands of those seeking to undermine the fourth estate’s reliability.

For now, deep fake technology — as convincing as some of the videos may look — is in its infancy. But it’s only going to get better, and, fortunately, tech companies like Microsoft are on the frontlines, building diagnostic tools that can better identify authentic versus phony videos.

For years, Microsoft has been able to look at altered digital photographs and identify metadata code that varies from the original. The same type of process is being employed now with deep fake videos.

“There is some level of editing that goes on [in deep fake videos],” said Jamie Burgess, Microsoft News Labs’ AI innovation lead. “Color treatment, saturation, all these kind of things that will alter the [code] of that video.… We’re looking at ways we can go all the way back to the camera, to generate a certificate from the camera — this was shot in this place, at this time, by this person, by this organization.”

Once the original has been verified, it can be compared with suspected fakes, which, when discovered, can be discarded or, if they’ve already been posted somewhere, removed.

In guarding against content tampering, Scanlon said the AP hires independent companies that purposefully try to hack into the organization’s networks to see where holes in protective infrastructure might exist.

All these steps and others can help preserve the integrity of reporting, ensure that consumers are getting correct information, and render claims of “fake news” as, well, fake news.

But is all this enough to keep sabotage at bay?

“Unfortunately, there’s no surefire way to stop it,” said Cameryn Beck, senior director of content strategy at the E.W. Scripps Co. “There’s a human element in it that we need to be reminded of on a daily basis. So I think we need to continue to be a bit cynical. We need to continue to be asking questions, again, taking a moment to be thoughtful and looking at what’s out there.”

