Verance Introduces Watermarking System In Advance Of 2024 Elections To Digitally Authenticate News Sources

Verance has introduced a watermarking system that digitally authenticates and tracks video from trusted news sources, ahead of the 2024 elections.

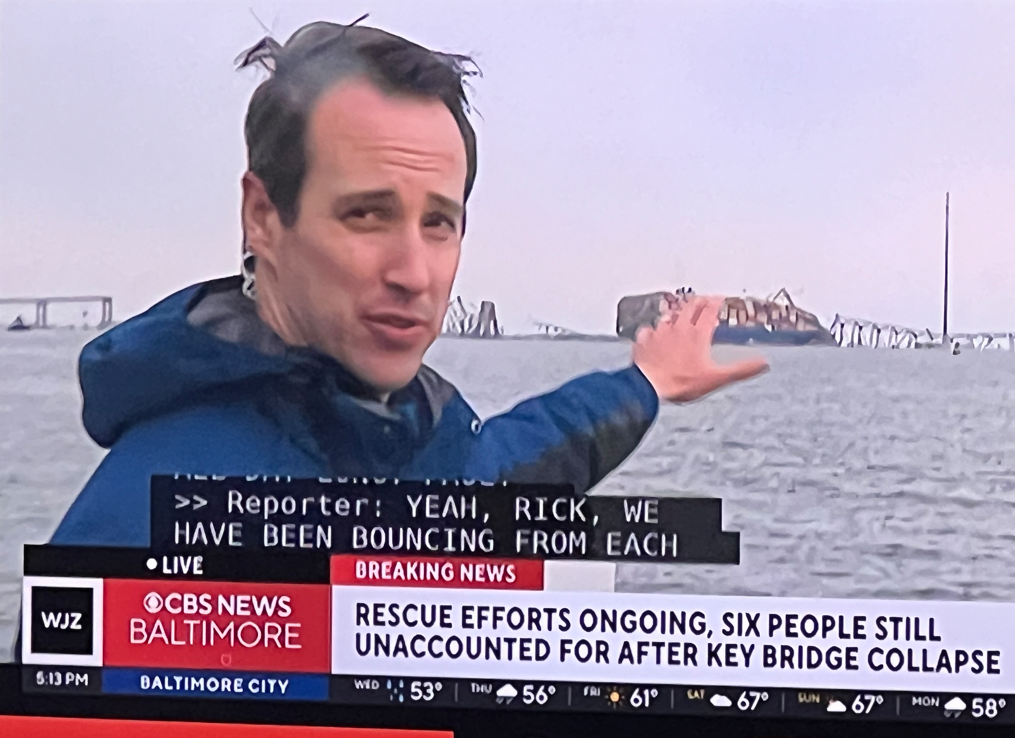

The "first-to-market system" arrives amid stepped-up online vigilance by major news organizations, including CBS News, which recently launched a fact-checking unit focused on AI to combat misinformation from deepfakes.

With presidential primary elections starting in January, Verance says it is in advanced talks with TV and digital news operations, as well as consumer electronics giants, about partnerships for its trusted news source watermarking system. Once the embedded Verance watermarks are detected, the system lets viewers assess the authenticity and accuracy of the information provided, including whether the media was tampered with or modified during distribution, and gives them access to fact-checking sources relevant to the news content.

The San Diego-based company is also making the system immediately available to a wide range of critical information sectors, including political campaigns and other corporate and governmental organizations. These organizations would work with makers of TVs and consumer devices to protect their audiences, and their own reputations, against the growing risks of online misinformation, misrepresentation and distortion.

Recent safeguarding actions in the public and private sectors reflect growing public unease about AI, with a recent Axios-Morning Consult poll showing that more than half of Americans expect AI-spread misinformation to affect who wins next year's elections.

Verance, whose watermarking systems have been standardized over nearly 30 years and are deployed in more than 500 million TVs and consumer devices worldwide, says it has also been consulting with senior members of Congress and government officials on watermarking technology and policy.

Nil Shah, Verance CEO, said: “It is important to enlarge the body of authenticated news content to reduce the influence of content that could be fake, especially in a hotly contested election year. Without universally trusted authenticity tools in this new era, the internet’s societal benefit as a means of accessing knowledge will precipitously decline. This presents a serious problem not only for large technology platforms such as Facebook and YouTube but also traditional news media that increasingly rely on the internet for sourcing and verification.”

The new watermark builds on Verance's work developing media management technologies to counter the rapidly growing harms of AI, which is becoming both more widely available and more capable of generating text, speech, images and video indistinguishable from natural content.

The system aligns with President Biden's recent executive order on AI, which builds on voluntary commitments already made by technology companies. The order requires the industry to develop a number of safety and security standards before AI products can be released to the public, and gives federal agencies an extensive to-do list for overseeing the deployment of AI.

The Commerce Department will now issue guidance on labeling and watermarking AI-generated content to help distinguish authentic interactions from those generated by software. The administration plans to fully implement the executive order over the next 90 to 365 days, with the safety and security items facing the earliest deadlines.

White House Deputy Chief of Staff Bruce Reed told the Associated Press around the time the order was signed that Biden "was as impressed and alarmed as anyone. He saw fake AI images of himself, of his dog. He saw how it can make bad poetry. And he's seen and heard the incredible and terrifying technology of voice cloning, which can take three seconds of your voice and turn it into an entire fake conversation."

The company said the new Verance watermark "could have played a role in undoing a propaganda campaign following the outbreak of the Middle East war." The New York Times reported that concerns about AI were used to cast doubt on the authenticity of a gruesome image of the charred body of a child following Hamas' Oct. 7 attack on Israel. Some social media observers quickly dismissed the real image as an AI-generated deepfake before their theory was debunked, but by then doubts about its veracity were already widespread. Numerous other examples of online misinformation have cropped up since the war broke out last month.
