For Captioning, AI Is Already Winning Against The Humans

Automatic speech recognition technology has improved so much in the last couple of years that it can now create better captions than humans.

Trained on large language models, the artificial intelligence (AI) engines used to generate closed captioning for broadcast content are now reaching 98% accuracy levels with about three seconds of latency for live captioning. In addition to being able to create transcripts of content, these products also offer translation capabilities. Captioning vendors are also automating operations to streamline the workflow.
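The article does not say how the 98% figure is measured. A common way to score captions is word error rate (WER): the number of substituted, dropped and inserted words divided by the number of words actually spoken, with accuracy taken as one minus WER. The Python sketch below illustrates that calculation under this assumption; the function names are hypothetical and do not come from any vendor mentioned here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # spoken word missing from the captions
                curr[j - 1] + 1,     # extra word inserted in the captions
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Accuracy expressed as 1 - WER (an assumed definition)."""
    return 1.0 - word_error_rate(reference, hypothesis)
```

Under this definition, one wrong word in every 50 spoken words corresponds to 98% accuracy.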

These changes couldn’t come fast enough as a trio of factors stack up to challenge live captioning. Fewer humans are becoming qualified stenographers, broadcasters are creating vastly more content that must be captioned and regulations are expected soon that will require captioning on all broadcast video content, no matter how it is distributed.

“Live captioning has been in need of some serious disruption for a while, and a lot of it is workflow-related,” Chris Antunes, 3Play Media co-founder and co-CEO, says. Stenographers are highly skilled but an “aging demographic.”

On the other hand, he notes, automatic speech recognition capabilities improve all the time, so much so that 3Play creates a State of Automatic Speech Recognition report each year and uses the top performer as the base for its services.

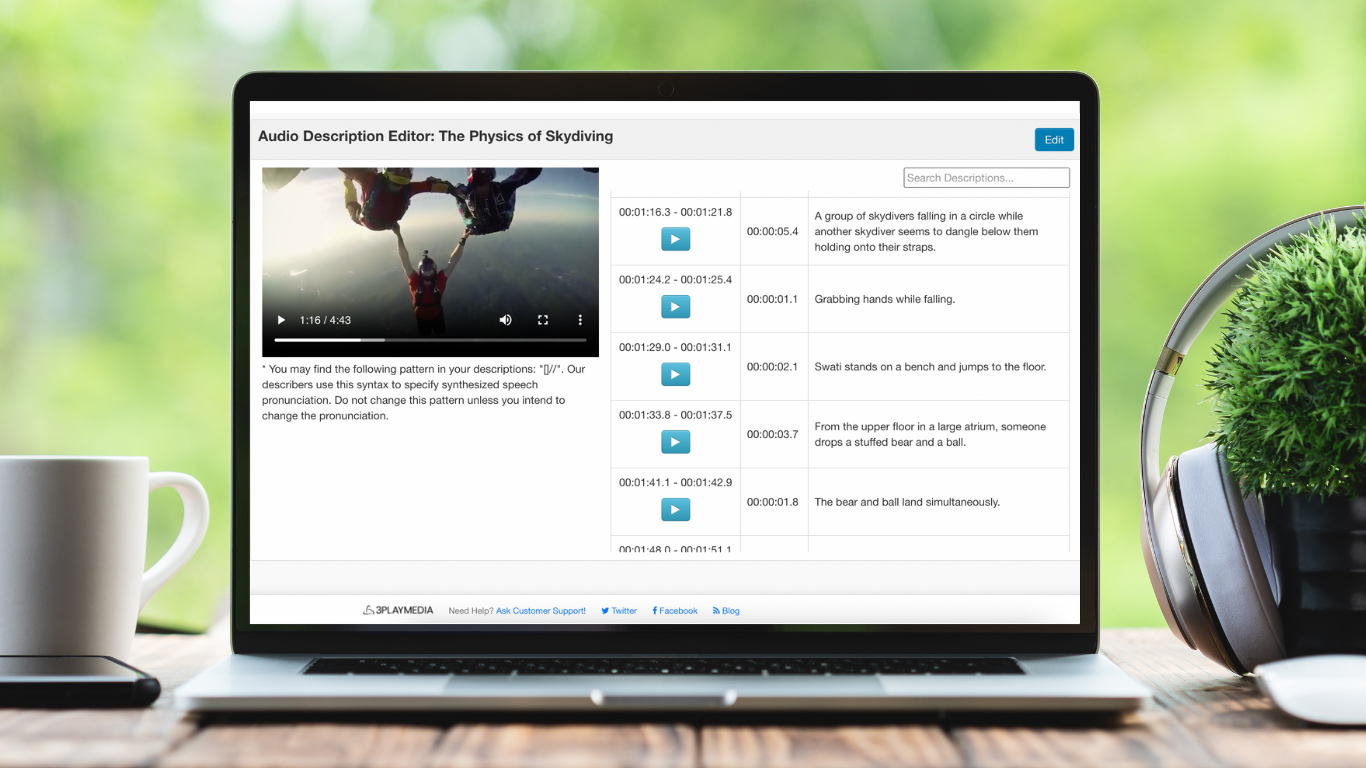

3Play Media’s Automatic Description Editor

More content than ever before is being created, and much of that content is not currently covered by captioning requirements. Regulators are poised to modernize captioning requirements, however, so that content would need to include captions and audio descriptions whether it went out over the air or over the top, Antunes adds.

“Audio description has been like a second-class citizen relative to captioning,” he says, noting audio description is more difficult to do because it must be given during gaps in the dialogue, requires a script that describes what’s happening, and needs human or synthesized narration. Broadcasters “have not had to do audio description in the way that they’ve had to do for closed captioning.”

Synthesized speech, although it raises “interesting questions legally,” could decrease the costs of audio description and would likely mean there is “a lot more described content,” Antunes says.

Russell Vijayan, director of business development, EMEA & APAC, at Digital Nirvana, says requirements and standards around captioning are evolving. In 2019, he says, broadcasters wouldn’t use speech to text for closed captioning, saying the quality was not good enough.

The quality of automated captioning has improved “exponentially,” he says, and it’s not just about the words. Broadcasters have been looking to ensure the captions appear in a “more user-engaging way” such that they provide Netflix-like quality.

As a result, he says, more and more broadcasters are adopting automated captioning. “We are seeing big adoptions of the tools,” he says.

Vijayan says some customers using Digital Nirvana’s TranceIQ captioning services are using it to create transcripts for metadata purposes. Journalists, he says, are also using the service to generate transcripts.

Bill Bennett, media solutions and accounts manager at Enco, says, “The requirement for captions is growing exponentially. The only way to keep up is with automated captioning technology.”

Automatic speech recognition and AI-based speech to text captioning systems are improving by the day.

“They are quick and more accurate than I’ve seen a lot of humans do,” Bennett says. “Automatic speech recognition can’t send out typos, where humans can.”

Further, he says, stenographers sometimes can’t keep up with rapid-fire conversations so they resort to summaries.

“But the deaf and hard of hearing do not want the summary. They want every word. They want to decide themselves the meaning of the words,” he says.

Enco’s enTranslate in enCaption.

When content runs through speech recognition technology for captioning, transcription or translation, that information can become metadata that can be used for archival searching.

Bennett says a radio broadcaster has started using enCaption to grow its audience by delivering live captions to those who typically don’t consume radio content.

“They can view it as the interview takes place” and can submit questions and comments in real-time, he says.

During this year’s NAB Show, the company debuted Enco-GPT, which uses AI to mine transcripts and provide a summary of the content.

“The AI will tell you what that hour-long interview was mostly about,” he says.

Enco-GPT is currently built into the ChatGPT platform.

“We’re working on integrating it into several of our products, including enCaption, but it’s not yet on the market,” Bennett says.

TVU Networks CEO Paul Shen says technology for closed captioning has been “changing at a speed we haven’t seen before.”

The last five years, he says, have seen a “significant uptick” in closed captioning with AI. He says the technology is better than human captioning. Latency is low and the captions are synchronized with speech, he adds.

“That level of capability is there now,” he says, noting the performance is improving as the costs are coming down.

Live Now Fox uses captioning services from TVU Networks.

Shen says one of the ways TVU Networks is easing the closed captioning workflow is by running speech recognition during ingest of content. This process makes the transcript part of the metadata.

“It’s much more than closed captioning. It makes content more findable,” he says. “If you don’t have metadata, you don’t have content, because you don’t know what you have.”
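To illustrate how a transcript captured at ingest can make content findable, the Python sketch below builds a small word-to-timestamp index from timed caption segments and searches it. It is a toy example, not a description of TVU Networks’ system; the segment format (start time in seconds plus text) and the function names are assumptions.

```python
import re
from collections import defaultdict

WORD = re.compile(r"[a-z0-9']+")


def build_index(segments):
    """Map each lowercase word to the sorted start times where it is spoken.

    `segments` is an iterable of (start_seconds, text) pairs (assumed shape).
    """
    index = defaultdict(set)
    for start_seconds, text in segments:
        for word in WORD.findall(text.lower()):
            index[word].add(start_seconds)
    return {word: sorted(times) for word, times in index.items()}


def search(index, query):
    """Return start times of segments that contain every word in the query."""
    words = WORD.findall(query.lower())
    if not words:
        return []
    hits = set(index.get(words[0], []))
    for word in words[1:]:
        hits &= set(index.get(word, []))
    return sorted(hits)


segments = [
    (0.0, "Good evening and welcome"),
    (4.5, "The city council voted tonight"),
    (9.0, "Council members welcome the vote"),
]
index = build_index(segments)
council_hits = search(index, "council")
```

Each hit is a timestamp, so an archive search can jump to the moment a word was spoken rather than just to the clip.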

One of the significant improvements in automatic speech recognition is the new ability to handle contextual speech.

An example of contextual capabilities, says Matt Mello, sales manager for EEG/Ai-Media Technologies, is recognizing a string of 10 numbers as a telephone number and formatting it accordingly.
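As a rough illustration of the formatting step Mello describes, the Python sketch below finds a run of exactly 10 spoken digits in a raw transcript and rewrites it as a phone number. It is a simple rule-based stand-in, not LEXI’s method: the input shape (single digits separated by spaces), the US-style output format and the function name are all assumptions, and a production system would rely on context rather than a fixed pattern.

```python
import re

# Exactly ten single digits separated by spaces, e.g. "5 5 5 0 1 2 3 4 5 6".
# The lookarounds reject longer digit runs so only 10-digit strings match.
TEN_DIGITS = re.compile(r"(?<!\d )\b\d(?: \d){9}\b(?! \d)")


def format_phone_numbers(text: str) -> str:
    """Rewrite each 10-digit run as (XXX) XXX-XXXX (assumed US-style format)."""
    def _format(match: re.Match) -> str:
        digits = match.group(0).replace(" ", "")
        return f"({digits[:3]}) {digits[3:6]}-{digits[6:]}"

    return TEN_DIGITS.sub(_format, text)


caption = format_phone_numbers("call us at 5 5 5 0 1 2 3 4 5 6 tonight")
```

Runs of nine or 11 digits are left untouched, which keeps the rule from mangling other numbers read aloud on air.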

Contextual understanding of language has vastly improved AI-driven automated speech recognition systems.

“There’s been a shift in tone in how we talk about automatic captioning to our customers,” Mello says.

In the early stages of LEXI, Ai-Media’s captioning solution, the software was positioned as a “good backup,” available to pitch in if a stenographer failed to show or was behind, he says. LEXI 3.0 was released at NAB.

“With the newest version, that’s no longer the case. We’re phrasing it as, ‘This should be your primary captioning’” method, he says.

Latency is “expected as part of live closed captioning,” Mello says.

Human captioners typically have a three- to six-second lag time, while LEXI is consistently about three seconds, as those seconds are needed for contextual processing of the words, he says.

“Three seconds is the sweet spot between latency and accuracy,” he says.

Renato Cassaca, chief software development engineer at Voice Interaction, says latency is always a concern when it comes to captioning. “We need the captions to be very fast. We don’t want to introduce a delay.”

Part of minimizing that delay is optimizing software, he says.

In the world of automated captioning, Cassaca says there have been many improvements: AI-driven captioning systems are better than ever and can be trained on larger models.

“We are starting to see improvement in accuracy where we didn’t expect or where we weren’t able to improve before,” he says.

Cassaca says Voice Interaction is running a closed captioning pilot in Brazil with six stations to improve the workflow by bypassing encoders and sending the captions straight to the multiplexer. “We don’t rely on third-party hardware to put the captions on the final signal that the television stations will send,” he says, adding the cost savings of this approach are “relevant” to broadcasters.

The company has developed translation technology that runs alongside the transcription engine.

“We are able to deliver two streams of captions—the original spoken language and the other translated language,” Cassaca says.

As the technology evolves, says Ai-Media’s Mello, “People are starting to realize the benefits of automatic captioning over traditional captioning methods.”

Comments (1)

Insider says:

July 20, 2023 at 11:00 am

There goes one of the best sources of comedy while watching shows as humans make mistakes in real time. A hidden gem most miss. I always keep closed captioning on for that very reason.

For reasons that always escaped me, most pre-recorded reality shows always used humans doing closed captioning in real time, even though they were edited far in advance. Survivor and Amazing Race were two of the biggest. Plus they did it again live for every time zone feed, which really made no sense but led to more opportunities for comedy.

One of my favorites was on the Amazing Race, when a contestant supposedly said “I can smell Phil” while looking for the pit stop location.

But only on the East Coast. It was correct on the West Coast feed.