TVN Tech | AI Tools Bolster Case For Enabling Comments

While newspapers have long engaged with readers via online comments, broadcasters have been slow to follow suit, partially because exchanges devolved into cesspools. But emerging AI-powered moderation tools can help keep the conversation civil and may spur broadcasters to rethink enabling story comments on their owned and operated digital platforms.

And there’s a business case for permitting online story commenting on an owned platform: “There is high, non-abstract business value to developing an authentic engaged relationship with the community [media companies] serve through various feedback loops,” says Frank Mungeam, chief innovation officer for the Local Media Association.

“Those feed trust, which feeds brand, which can be leveraged in all the ways broadcasters monetize; and, conversely, without trust and brand equity, every one of those monetization efforts gets harder,” Mungeam says.

Just trusting people to be nice online won’t cut it, so automated moderation tools are necessary to keep toxic comments from ever seeing the light of day. While human moderators must still approve or deny some comments, ever-improving algorithms are helping minimize how much time they must spend on that task.

In the end, experts say, broadcasters who commit the time, money and technology to moderating comments on their own platforms will benefit not just from an engaged audience loyal to their brand but also from the opportunity for direct and indirect monetization of the comments section.

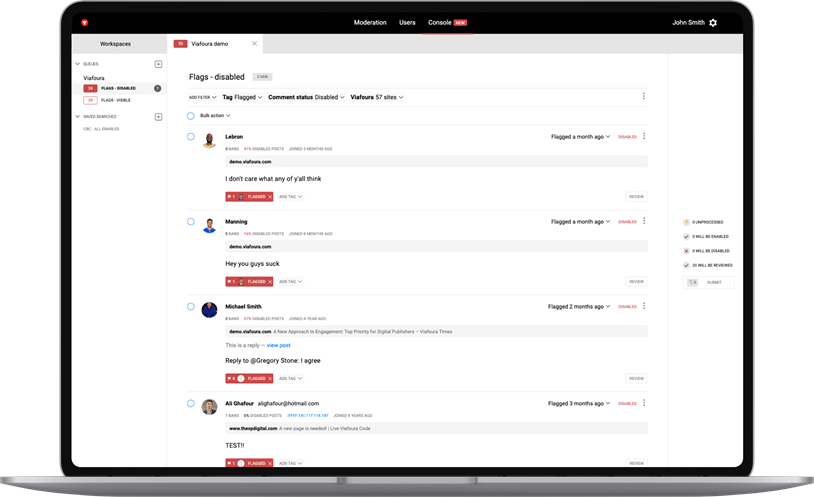

Viafoura’s moderator queue allows moderators to view shared queues and time-based banning through a single screen. This screen shows all the comments that members have flagged. The console can also show items that are “awaiting moderation” from the automated moderation algorithm, which captures up to 90% of incivility.

“I get discouraged when I see groups shutting down comments and sending people to Facebook or Twitter,” says Dustin Block, audience development lead at Graham Media Group. “That’s a big mistake and a step backward.”

But it can be understandable, Mungeam says, because of the reach and ease of use of social media platforms. “People are used to scrolling their feed and commenting in that.”

The Case For Loyalty Via Commenting

When people are on social media platforms, they’re also used to “behaving in certain ways,” says Andrew Losowsky, head of Vox Media’s Coral, an open source commenting platform used by the Wall Street Journal and Washington Post, among others. A broadcaster can set the rules for the comments on its own platforms, he adds.

“This is different from social media. This is respectful. This is our space. If you want to yell at people, you have the whole rest of the internet for that,” Losowsky says.

But while comments sections can bring a host of benefits to the owners of the platforms they’re on, Losowsky says it appears TV stations are generally less focused on online audiences than their newspaper counterparts.

In the newspaper industry, there’s been a “greater move to growing loyal members” who may make use of an app, sign up for memberships or pay for content, he says.

A broadcaster that allows all discussion to take place on social media platforms is not only losing out on an engaged audience, Graham’s Block says, but essentially handing the reader’s lifetime value over to those other platforms.

“Local media needs to stop doing that. They need to build their own platform,” Block says.

Ignoring Comments Is ‘Ignoring Your Audience’

Leigh Adams, director of moderation at Viafoura, says comments on the site reflect the broadcaster’s brand. As such, broadcasters must have a plan for comments, she says.

It is critical for broadcasters to sit down and say: “Here is what we want, here is how we want our audience to engage, and here is how as a brand we want our comments to reflect on us,” Adams says.

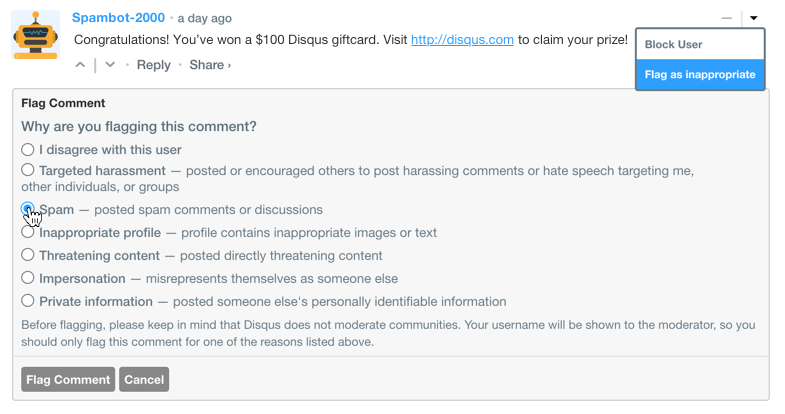

Commenters can flag comments posted by other Disqus users.

“If you don’t have a policy for comments, you’re not paying any attention to your audience. You’re saying you don’t care about your brand,” she adds. Perceptions are changing from the ideas that “comments are a cesspool or nobody reads them anyway.”

In fact, she says, people tend to spend a lot of time in the comments and ignoring that is tantamount to “ignoring your audience.”

Avoiding Toxicity

Toxic comments can deter others from joining the conversation in the first place, says Adi Jain, VP for the Disqus comment moderation product.

Readers who see toxic comments are “27% less likely to comment,” Jain says.

Block says Graham has used Viafoura’s artificial intelligence-powered moderation tools for several years as a way to encourage audience engagement and gain control over audience participation.

“We’re trying to build this the right way, to build a civil and productive conversation space,” Block says.

It seems to be working, he says, because commenters are policing fellow commenters to keep the tone of the comments appropriate for the site.

Even with moderation tools and an engaged audience flagging problematic posts that had made it through the filters, editors still had “an around-the-clock job just to get rid of the worst of the worst comments,” he says.

As a result, Graham signed on for full-service human moderation to supplement the automated moderation tools for comments across all the Graham sites.

With these tools in place, Graham has seen a “huge” increase in engagement, particularly with respect to potentially contentious topics like COVID, Black Lives Matter and the election.

All readers who wish to comment must register through the Graham website, and Block estimates the lifetime value of registered digital users is “extraordinarily” high because they visit the site more often, click ads more frequently and watch more videos than those who don’t register.

“They do everything that we want them to do at a much higher rate than unknown users,” Block says.

But not every story should have comments enabled. According to Mungeam, it’s essential to “exercise editorial discretion” about when to allow comments. For instance, he says, certain types of stories, like car crashes, don’t lead to meaningful conversation, while stories about funding schools can lead to relevant civic dialogue.

AI-Enabled Tools

When comments are enabled on a story, automated moderation tools can catch the vast majority of toxic or otherwise inappropriate comments. AI-powered filtering tools recognize things like hate speech, profanity and threats and prevent them from appearing on the comments feed.

AI is great for quickly and easily removing or holding back comments that shouldn’t post, Losowsky says. But AI on its own is not enough to handle comment moderation. Even social media platforms like Facebook use human moderators, he says.

“It’s artificial intelligence-powered human interaction, not artificial intelligence running the whole thing,” he says.

Artificial intelligence, machine learning and natural language processing all come together to continuously improve the moderation algorithms used by Viafoura’s Moderation Console tool.

Its Intelligent Auto Moderation Engine filters out the comments that shouldn’t post, identifies the comments that would benefit from human review and allows the rest of the comments to post. This approach allows broadcasters to focus their resources on the comments that have the greatest likelihood of being risky, Adams says. Typically, 80% to 90% of comments that aren’t rejected by the filter go live, with the rest being reviewed by humans, she says.

“The bigger that net is, the more you’ll catch,” Adams says.
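The three-way routing Adams describes (reject, hold for human review, or publish) can be sketched roughly as follows. This is only an illustration, not Viafoura's actual engine: the `toxicity` score is assumed to come from some upstream classifier, and the `REJECT_AT` and `REVIEW_AT` thresholds are hypothetical values chosen for the example.

```python
from enum import Enum


class Decision(Enum):
    REJECT = "reject"          # never shown to readers
    REVIEW = "human_review"    # held in the moderator queue
    PUBLISH = "publish"        # goes live immediately


# Hypothetical thresholds; a real deployment would tune these per site.
REJECT_AT = 0.9
REVIEW_AT = 0.5


def triage(toxicity: float) -> Decision:
    """Route a comment based on an assumed toxicity score in [0, 1]."""
    if not 0.0 <= toxicity <= 1.0:
        raise ValueError("toxicity must be between 0 and 1")
    if toxicity >= REJECT_AT:
        return Decision.REJECT
    if toxicity >= REVIEW_AT:
        return Decision.REVIEW
    return Decision.PUBLISH
```

In this sketch, lowering `REVIEW_AT` widens the net: more comments are held for moderators, at the cost of more human review time.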

But a word that might be fine in one context might not be for another, she notes.

“‘Stupid’ is one that comes up a lot, like ‘this whole political storm is stupid’ versus ‘you’re stupid,’ ” Adams says.

Further complicating the algorithms, she says, is the fact that the words and phrases used by different audiences and regions vary. Algorithms are tailored to individual clients, she says, to address those local market nuances, such as nicknames for athletes or politicians.

These algorithms can also be tweaked to handle the types of comments associated with different types of stories, she says. For example, broadcasters covering the George Floyd story required specific rule sets for the comment moderation algorithm due to early toxicity of the comments.

“Within a week it turned around,” Adams says. “The editors were seeing the level of toxicity had dropped significantly.”

Disqus’ Jain says his product follows a three-step strategy, relying first on automated tools to catch bad content before anyone has to see it, then on users to flag inappropriate content and finally on human moderators to look at questionable content.
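One way to picture that three-step flow is the sketch below. It is not Disqus code: the `Comment` fields, the `FLAGS_FOR_REVIEW` cutoff and the returned labels are all hypothetical, chosen only to show the order in which the three checks apply.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    text: str
    auto_blocked: bool = False   # step 1: verdict from an assumed automated filter
    user_flags: int = 0          # step 2: flags raised by other readers


# Hypothetical cutoff: how many reader flags send a comment to a human.
FLAGS_FOR_REVIEW = 3


def next_step(comment: Comment) -> str:
    """Return where the comment goes next in the three-step flow."""
    if comment.auto_blocked:
        return "blocked"            # caught before anyone sees it
    if comment.user_flags >= FLAGS_FOR_REVIEW:
        return "moderator_queue"    # step 3: a human makes the call
    return "visible"
```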

Improving Automation

Over the years, the automation element of moderation has improved significantly, Jain says. Video image analysis tools, for instance, can identify things like nudity and weapons. Text-based analysis is learning to identify profanity, insults, toxicity, threats and hate speech and discern intent, he says.

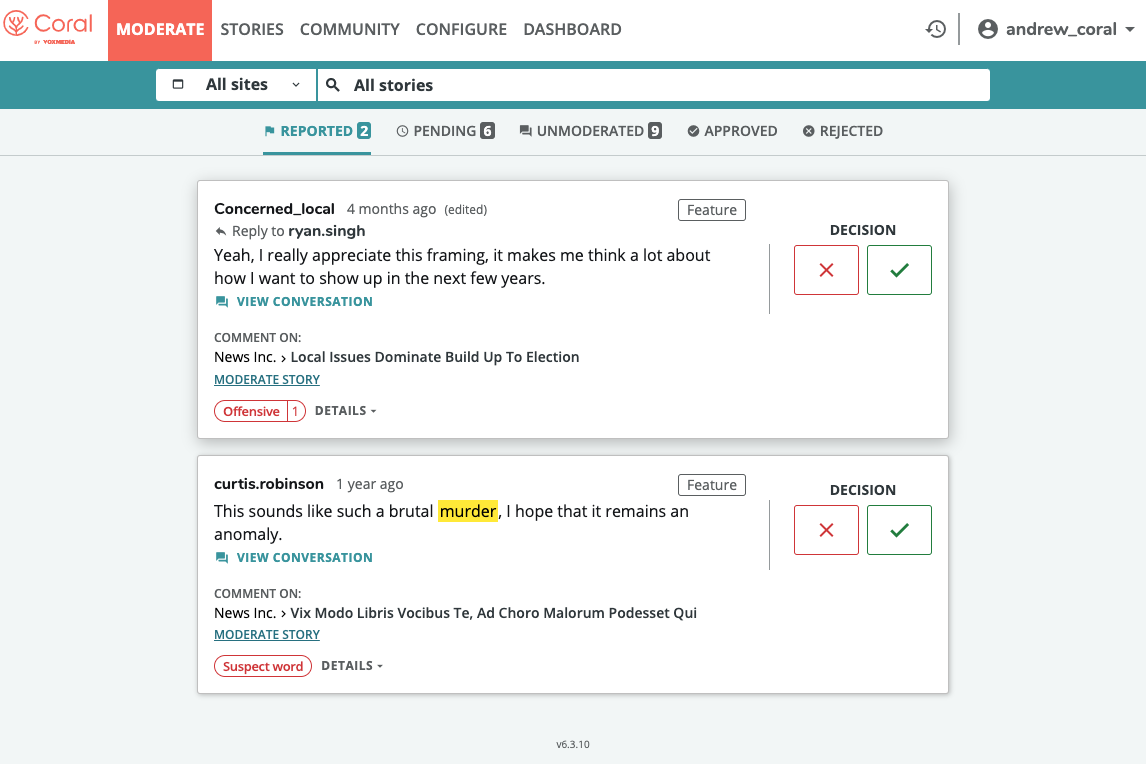

Coral’s moderation system allows human moderators to approve or deny comments. (Source: Coral by Vox Media)

Thanks to machine learning and natural language processing, he says, the automation tools can “start to moderate what the moderator will do.” For example, “the majority of the time there is a comment of this sort, the moderator approves it or deletes it.”
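The idea of learning from what moderators usually do can be illustrated with a simple counting stand-in for the machine learning Jain mentions. Everything here is an assumption for the example: the comment categories, the `record` and `suggest` helpers, and the `min_samples` and `min_share` cutoffs.

```python
from collections import Counter, defaultdict

# Past moderator decisions, keyed by a hypothetical comment category.
history = defaultdict(Counter)


def record(category: str, action: str) -> None:
    """Log what a moderator did with a comment of this category."""
    history[category][action] += 1


def suggest(category: str, min_samples: int = 5, min_share: float = 0.8):
    """Suggest the usual action, or None if the pattern is not clear enough."""
    counts = history[category]
    total = sum(counts.values())
    if total == 0 or total < min_samples:
        return None
    action, n = counts.most_common(1)[0]
    return action if n / total >= min_share else None
```

A suggestion is only returned when moderators have handled enough comments of that kind and have mostly agreed; otherwise the comment still needs a human decision.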

Disqus is also starting to look at the moment the comment is created, Jain says.

“If we can determine how toxic a comment is [after it’s submitted], we can determine how toxic it is when they type it,” he says.

This is, he says, one of the most exciting tools being created right now in the world of comment moderation. The tool can alert the user stating that their comment is likely to be taken the wrong way and allow them to modify the comment, he says. Currently, Disqus is “exploring and evaluating the concept and doing lots of testing and data evaluation,” so no product is yet available to the public.

“When you have so many commenters, you’re going to see bad actors,” Jain says. The question is whether they’re motivated by a specific piece of content or whether they’re having a bad day.

Disqus scores users on their actions across the various networks. It tracks things like how much the user contributes to discussions, how many likes they’ve given or received and how many of their comments have been flagged for toxicity or use of restricted words.

“We can track the same user going from site to site to site, spewing the same thing,” Jain says.
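A user-level score built from the signals Jain lists might look something like the sketch below. The formula and weights are invented for illustration and are not Disqus's scoring method; `UserActivity` and `reputation` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class UserActivity:
    comments: int          # contributions to discussions
    likes_given: int       # recorded, but not weighted in this sketch
    likes_received: int
    flagged_comments: int  # flagged for toxicity or restricted words


def reputation(u: UserActivity) -> float:
    """Toy score in [0, 1]; the weights below are made up for illustration."""
    if u.comments == 0:
        return 0.5  # no history yet, so stay neutral
    flagged_rate = u.flagged_comments / u.comments
    likes_per_comment = u.likes_received / u.comments
    # Reward likes (capped so they add at most 0.5), penalise flags.
    score = 0.5 + 0.5 * min(likes_per_comment / 10, 1.0) - flagged_rate
    return max(0.0, min(1.0, score))
```

Because the score follows the user rather than the site, the same low value would apply wherever that account posts.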

Whatever third-party moderation tool is used, Mungeam says, it’s showing the media company’s belief in the worth of what users have to say. “It’s a commitment in time, technology and money,” he says. “It’s a commitment to believing that there’s true value in commenting conversations.”

Costs And Revenue Prospects

That investment can pay off financially for broadcasters, although experts disagree on whether comments should be monetized through advertising.

Losowsky says Coral’s commenting platform is not carrying advertising based on the view that surveillance technology on the platform serves advertisers more than it serves the broadcaster.

“It pulls readers away from our site to the advertiser’s site,” he says.

The Coral moderation product is available as a SaaS model, and pricing depends on the size of the site and its user community.

Disqus and Viafoura, on the other hand, offer both free and paid moderation models.

According to Adams, combining the auto-moderation tools with Viafoura’s trained moderators can reduce in-house moderation costs by up to 80%. The auto-moderation tools are available either as a paid SaaS offering or under a free ad-based revenue sharing model. Costs for the service depend on the number of comments, the level of moderation and whether trained moderators are working around the clock or only certain hours. Graham is using the SaaS model for auto-moderation.

Disqus’ free model is ad-supported with revenue sharing, Jain says, and the company offers that in an effort to ensure clean comment communities are available to the majority of users.

Broadcasters can choose types of ads that are not permitted in this model, he says, and advertisers are becoming increasingly aware of the content on the sites they’re advertising on.

“It’s not just the story but the comments as well, so strong moderation is key,” Jain says.

The paid SaaS version allows publishers to customize and brand the comment area.

Whether the moderated commenting platform is running on a free or paid model, Jain says, audiences tend to spend 35% more time on the site, with more than half of readers scrolling and reading comments.

“There’s a lot of eyeballs,” Jain says. “The amount of engagement, and the amount of time on the site are the largest drivers for success on the web today.”
