Automated Captioning Leaps Forward, Improving Its Business Case

The technologies behind automated closed captioning keep getting more accurate while costs fall, helping broadcasters caption programming beyond what regulations require.

While government regulations drive some of the closed captioning that broadcasters provide, broadcasters also have solid business reasons for captioning as much of their content as possible. Vendors are harnessing technologies like artificial intelligence (AI), machine learning and natural language understanding and processing to continually improve the captioning accuracy of their offerings.

To deliver accurate closed captioning, these products and services must overcome challenges like ambient noise, accents and mumbled speech, and must also understand slang and local names. More broadcasters are trusting automated captioning, and vendors believe automated services could soon eliminate the need for human closed captioning services.

Paul Shen, CEO of TVU Networks, says more broadcasters than ever want to ensure all of their content is closed captioned, not just the content that the FCC and regulatory agencies in other countries require to be captioned. One reason, he says, is that captioning can broaden their audiences by reaching viewers they couldn’t otherwise reach.

As Bill Bennett, media solutions account manager for ENCO, puts it, “There’s a far bigger audience than what’s regulated by the FCC,” and captioning helps bring that content to more audiences.

Voice Interaction CEO Joao Neto says creators not regulated by the FCC or similar agencies still want to provide captioning for all their content “because it’s a good service. What they are facing is the problem of cost.”

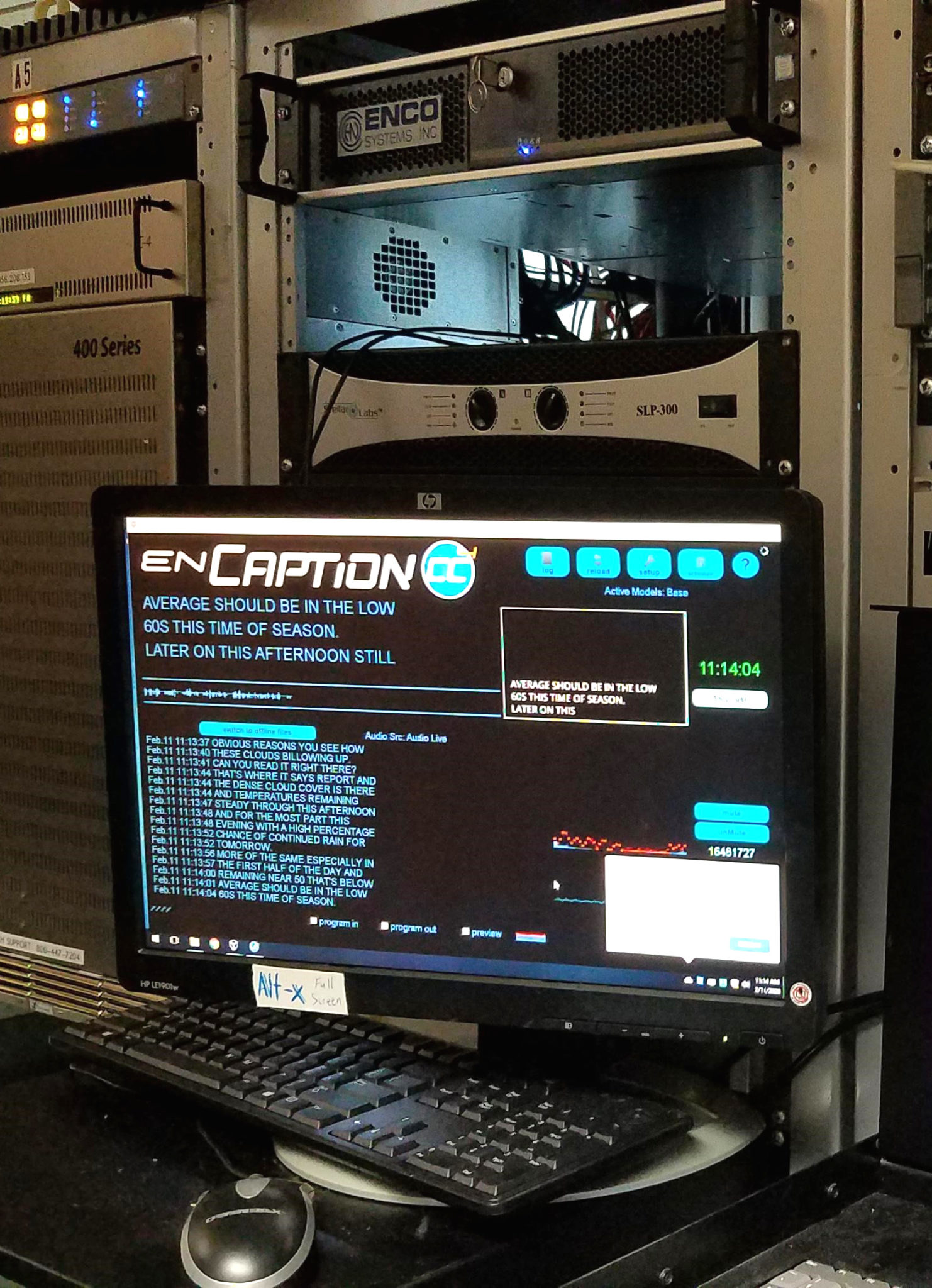

ENCO’s enCaption in use at KTBS (ABC) and KPXJ (CW) in Shreveport, La.

Rich Anderson, captioning offering manager at IBM, says it’s clear that captions aren’t just for the deaf or hard of hearing.

People in a crowded room can watch video with captions and the sound off, so they don’t annoy others. Multiple televisions in a venue like a sports bar can run different captioned channels simultaneously for a variety of viewers.

In short, Anderson says, the stations “want to provide these services for whatever reason the viewership wants it.”

Ed Hauber, who manages business development for Digital Nirvana, says there are additional applications for captioning technologies that broadcasters can take advantage of, such as workflow integration with third-party tools and speeding up production through automated metadata.

Improving Accuracy

Technologies like AI, machine learning and natural language understanding and processing are constantly improving the accuracy of speech-to-text services, but without custom models, accuracy levels aren’t always as high as broadcasters need to meet closed captioning regulations.

“It’s easy to have a speech recognition system that kind of gets it right 80% of the time, but that’s not good enough,” Bennett says.

Speech-to-text accuracy can be complicated by a number of things, he notes, including ambient noise such as a rumbling subway, a jackhammer or cheering crowds, as well as mumbling, accents and unique words and names.

Deep machine learning helps ENCO’s enCaption understand the same word when pronounced by people with different accents, Bennett says. Some sounds like a subway are ignored by enCaption, he says.

“A lot of the noise we hear, it doesn’t hear outside of the vocal range,” he says. At the same time, it can “listen through the mumbled sounds of the mask and still understand what they’re saying.”

According to Bennett, enCaption can draw on custom word libraries of local names in addition to a general dictionary of 1 million words when selecting words. Statistical analysis of which words often appear together can help drive up speech-to-text accuracy, Bennett says.

“Statistical analysis helps it get better when it’s not sure about what it hears,” Bennett says.
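As a rough illustration of the idea Bennett describes, and not a description of how enCaption actually works, the sketch below counts which words follow one another in a small sample of text and uses those counts to choose between two similar-sounding candidates. The sample sentences, the function names and the add-one smoothing are all assumptions made for this example.

```python
from collections import Counter

def train_bigrams(sentences):
    """Count single words and adjacent word pairs in sample text."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split()  # <s> marks the sentence start
        unigrams.update(words)
        bigrams.update(zip(words, words[1:]))
    return unigrams, bigrams

def bigram_score(prev_word, word, unigrams, bigrams, vocab_size):
    """Add-one smoothed estimate of how likely `word` is to follow `prev_word`."""
    return (bigrams[(prev_word, word)] + 1) / (unigrams[prev_word] + vocab_size)

def pick_word(prev_word, candidates, unigrams, bigrams):
    """Return the candidate that most plausibly follows `prev_word`."""
    vocab_size = len(unigrams)
    return max(
        candidates,
        key=lambda w: bigram_score(prev_word, w, unigrams, bigrams, vocab_size),
    )

# Hypothetical sample text standing in for a station's past transcripts.
corpus = [
    "the city council met on tuesday",
    "the city council approved the budget",
    "the county council met on monday",
]
unigrams, bigrams = train_bigrams(corpus)

# The recognizer is unsure whether it heard "counsel" or "council" after "city".
best = pick_word("city", ["counsel", "council"], unigrams, bigrams)
```

Here `best` comes out as "council" because that word follows "city" twice in the sample text while "counsel" never does. Production systems rely on far larger models, but the principle of letting word context break ties is the same.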

There are other audio challenges as well, notes IBM’s Anderson. For instance, virtual interviews and phone videos don’t always provide “clean audio.”

It’s possible to filter out sounds to remove background noise and use AI to assess captioned content, he adds.

“There are lots of avenues and angles you can look at, like, ‘does this look like this is correct?’” Anderson says.

Automated captioning is “not at 100% accuracy, but that’s our goal,” he says.

Watson Captioning Live improves continuously and incrementally by combining a global news language model with hyperlocalized custom language models for each station, Anderson says. Watson Captioning On Demand is currently a custom solution that is on the road map to be added to the Live platform, he says.

Having captions appear with the appropriate audio is also important.

Shen says TVU Transcriber’s automated captioning system buffers the audio briefly in order to synchronize the closed captioning text with the audio.

“The result is the user doesn’t see a delay. It’s perfectly synched,” Shen says.
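The buffering Shen describes can be pictured as a short delay line: the program is held back by roughly the time the speech recognizer needs, so each piece of audio goes out together with its caption. The sketch below is a simplified model of that idea under stated assumptions, not TVU's implementation. Frames are represented by integer IDs, and the class name, function name and fixed latency are hypothetical.

```python
from collections import deque

class DelayBuffer:
    """Hold frames for a fixed number of ticks before releasing them."""

    def __init__(self, delay_frames):
        self.delay_frames = delay_frames
        self.frames = deque()

    def push(self, frame):
        """Add a frame; return the oldest frame once the delay has elapsed, else None."""
        self.frames.append(frame)
        if len(self.frames) > self.delay_frames:
            return self.frames.popleft()
        return None

def play_out(frames, captions_by_frame, caption_latency):
    """Pair each released frame with its caption, assuming a constant recognizer latency."""
    buffer = DelayBuffer(caption_latency)
    ready = {}   # captions the recognizer has finished so far
    output = []
    for tick, frame in enumerate(frames):
        # The caption for the frame seen `caption_latency` ticks ago is ready now.
        done = tick - caption_latency
        if done >= 0 and done in captions_by_frame:
            ready[done] = captions_by_frame[done]
        released = buffer.push(frame)
        if released is not None:
            output.append((released, ready.get(released, "")))
    return output

# Hypothetical input: five frames, captions for the first three, latency of two ticks.
captions = {0: "Good", 1: "evening", 2: "Shreveport"}
paired = play_out([0, 1, 2, 3, 4], captions, caption_latency=2)
```

In this run `paired` holds frames 0, 1 and 2, each matched with its own caption, while frames 3 and 4 are still waiting in the buffer. The trade-off is that the whole program goes out slightly later than real time in exchange for captions that line up with the speech.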

TVU Transcriber is a plug-and-play, real-time speech-to-text transcribing service that leverages AI voice recognition technology.

Two of the major recent breakthroughs in closed captioning technology have been the ability to function in noisy environments and the ability to recognize speakers, Shen says. Now, he says, one of the bigger challenges is for systems to provide quality captions when languages are mixed, which is common in India, where people may use both Hindi and English words in the same sentence.

Shen says TVU is working on this problem with its partners and believes this “will be addressed shortly.”

Improving Speed and Trust

Accuracy is important, but so are speed and trust.

Software technology is able to parse text then deliver captions in near-instantaneous time frames, says Digital Nirvana’s Hauber. Over the last few years, he says, the accuracy and speed of this technology have “really improved” for both offline and live content.

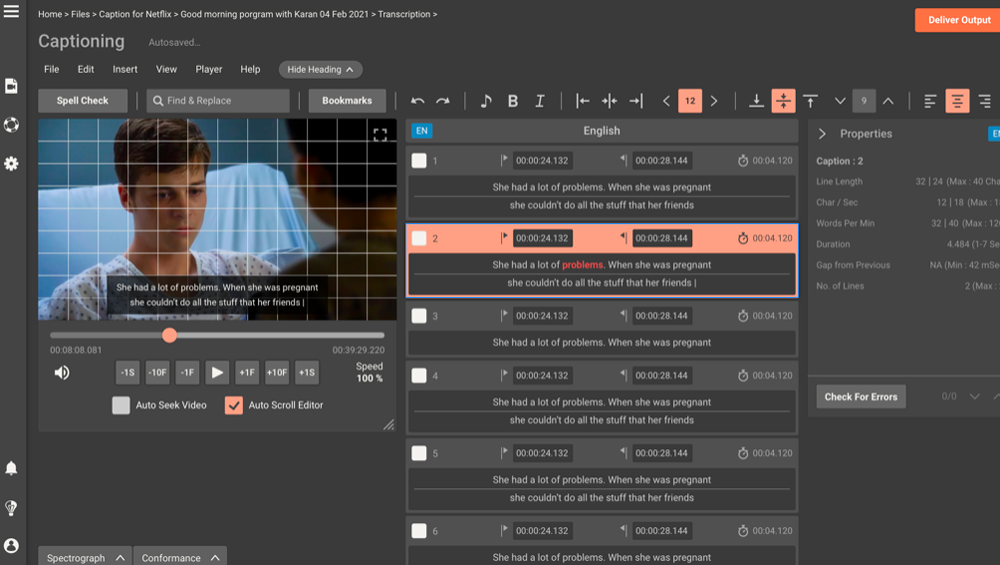

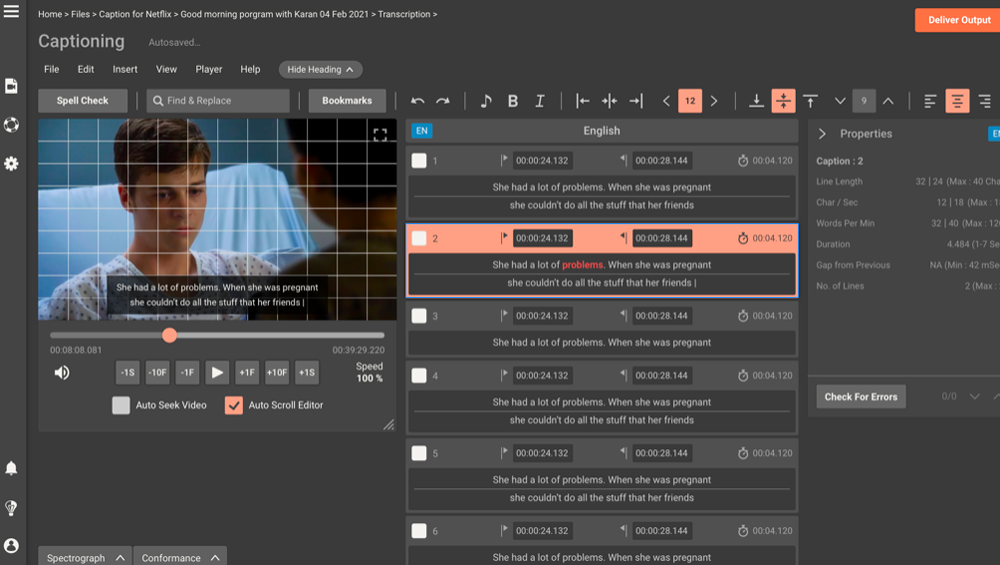

Digital Nirvana’s Trance closed captioning system leverages natural language understanding and natural language processing to help it understand the context, emotion and intent of content, Hauber says.

“This allows us to create the most accurate transcripts and captions, and it prevents the user from having to do a bunch of heavy lifting themselves,” Hauber says.

This technology can also be used for tasks like assigning metadata to content so users can more readily find media for story packages, he says.

Neto says he’s seen more and more smaller market stations embracing the use of automated closed captioning services as a means to provide more captioned content at lower costs than human-supplied captions. The cost of automated captioning is more affordable for them, he says, while still providing an acceptable level of accuracy, quality and latency.

“It’s an investment that makes sense to a small station,” Neto adds. At the same time, he is starting to “see a shift between the manual operations to an automatic system in the top 25 markets.”

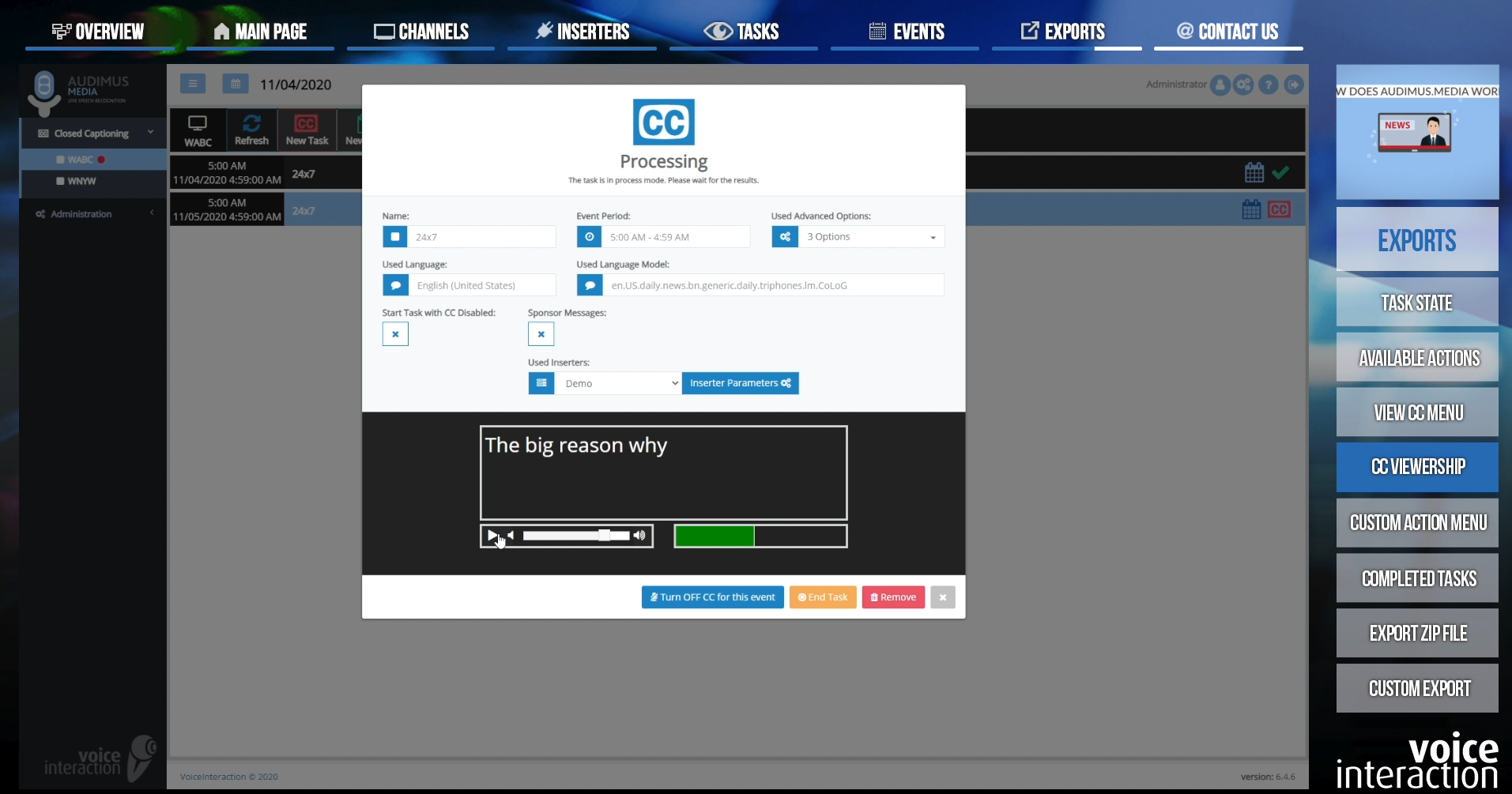

The Audimus.Media Interface showing closed caption content being generated.

Stations that adopt Voice Interaction’s Audimus.Media often start with a hybrid closed captioning solution that mixes manual, teleprompter and automated captions, he says. At that initial stage, the automated captioning is around 90% to 92% accurate, he says. After training to localize the model, accuracy rises to 96% to 98%, he says.
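The article does not say how these accuracy percentages are measured. One widely used yardstick for speech-to-text output is word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the automated caption into a reference transcript, divided by the number of words in the reference. The sketch below computes it with a standard edit-distance calculation. The example sentences are made up, and treating accuracy as 1 minus WER is a simplifying assumption.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between two transcripts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] is the edit distance between the reference words processed so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # word missing from the caption (deletion)
                curr[j - 1] + 1,     # extra word in the caption (insertion)
                prev[j - 1] + cost,  # match or wrong word (substitution)
            ))
        prev = curr
    return prev[-1] / len(ref)

# Hypothetical ten-word reference and an automated caption with one wrong word.
reference = "the mayor spoke at city hall about the new budget"
caption = "the mayor spoke at city hall about the new buzzard"
wer = word_error_rate(reference, caption)
accuracy = 1 - wer
```

One wrong word in ten gives a WER of 0.1, or roughly 90% accuracy by this simplified measure. On that scale, moving from 90% to 98% means going from about one error every 10 words to one every 50.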

As Neto puts it, “these systems are learning from data, so they are always improving.”

And unlike human closed captioning specialists, they don’t need coffee breaks, holidays or weekends, Neto adds.

The technology, Shen says, is “getting to the point where it’s better than a lot of humans.” That, Hauber says, is the highest accuracy bar to cross: real-time automated closed captioning accurate enough to replace a human.

“The technology is getting there,” Hauber says. “We’re right on the cusp of being able to do that.”
